Summary

We make multiple attempts to convert a 2D symbolic map into a 3D imagined landscape, working purely with ChatGPT.

Background

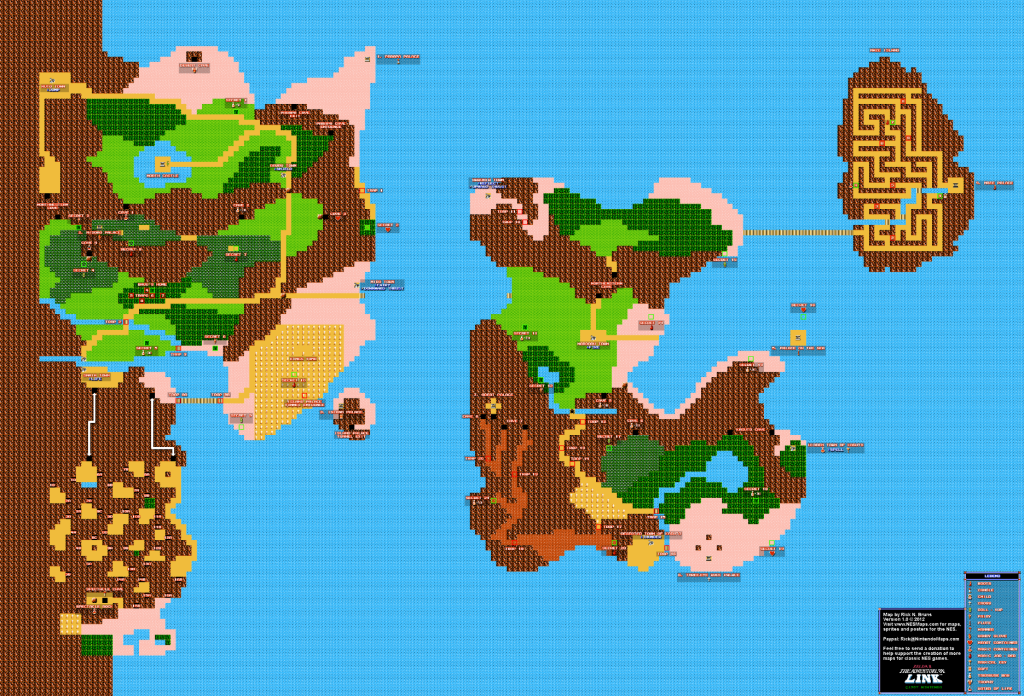

The original Zelda computer games were based on large 2D maps, where the map design was critical for various puzzles, upgrades, secrets, and plot advancement. Every tile was hand-crafted, and you explored the maps one small segment at a time.

As a player, you build up a visual mental model of the game-world in your head from this 2D view. I thought it would be fun (and quick and easy) to get an LLM to convert the complete 2D map (produced from screenshots by players over the years) into a photo-realistic 3D view – and see how well it corresponded to my memory.

Note:

This will be a long post since I’ll be showing all the working as we go along.

Why use AI here?

This use-case is almost impossible for a non-AI algorithm to achieve, because it relies heavily upon semantics – the actual data is entirely absent. The map we start with is:

- symbolic: ‘artistic representations’ of tiles are used, instead of actual graphics

- implicit: biomes are inferred by the human player – the arid “desert” regions vs the lush “wetlands” regions

- sparse: there is zero data in the original map about elevation – the human player imagines mountains, hills, plains, etc. – but none of that is ever stored in the game

- unrelated to the output: none of the graphics, colors, visuals will be used

It’s also a project that has – in this case – no real-world value beyond entertainment and personal interest. If I were doing this for a commercial use I could hire a 3D artist to manually sculpt/build a 3D map (or spend a few weeks with Blender etc doing it myself). But let’s be clear: doing it by hand would take a lot of time.

Preparation / setup

First we use Google to find a fan-made map of Zelda2: https://nesmaps.com/maps/Zelda2/Zelda2Overworld.html

…but the first half of the game takes place entirely in the left half of the map, so to make this easier on the AI let’s start with just that area. I cropped it roughly, paying no attention to trying to be pixel perfect, down to this:

Attempt 1: Just Do It

The best way to start with most new AI/LLM prompts is to be clear, but open-ended, and avoid being prescriptive. We’re in October 2024, using ChatGPT’s default model today – “GPT-4o”.

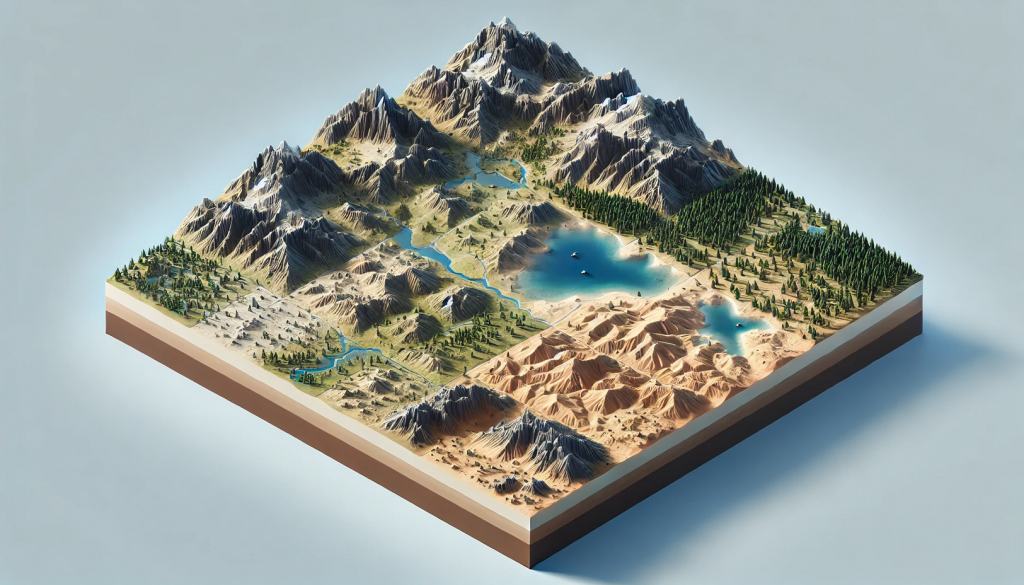

“Interpret this overhead symbolic map into a realistic terrain.

Create a new version of the map converting the tile-based style to vector-based style, but preserving/inferring the terrain features and semantic meaning.

Create another version of the map as a heightfield inferred from the probable terrain types.

Create a photorealistc isometric 3D render of the map from a 2/3 view that uses the heightmap and the inferred meaning of each part of the map e.g. trees to render a forest, e.g. seas to render water”

Aside: OpenAI / ChatGPT unfixed-bugs

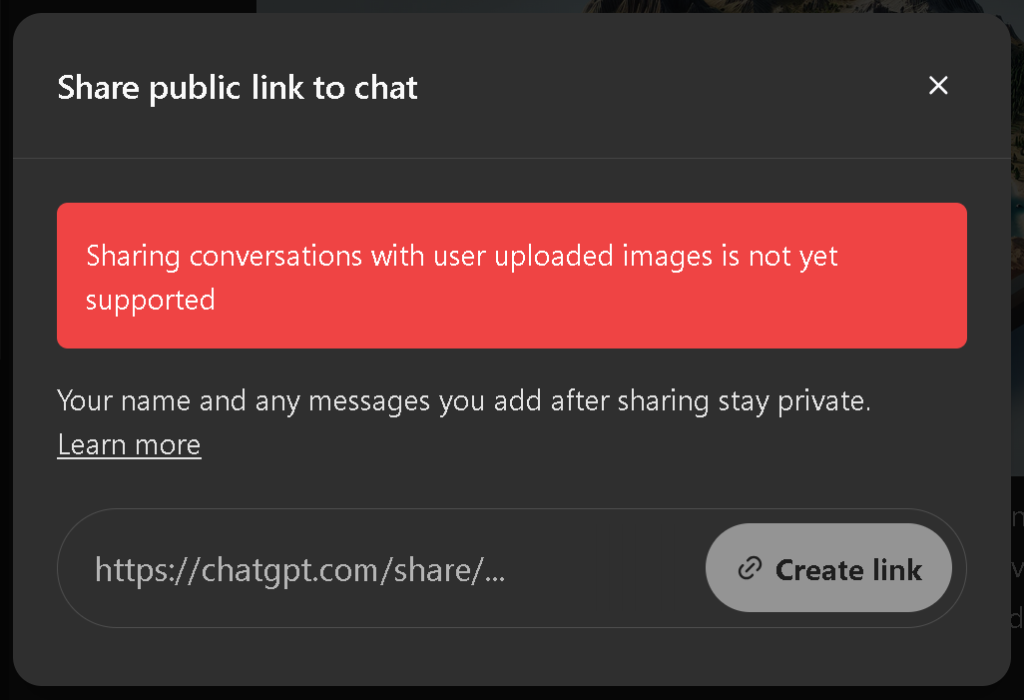

Immediately we hit a major bug in ChatGPT: They actively block you from sharing your work (which, let’s not forget: we’re paying for). There is no technical reason for this, they could easily have let me export the conversation (or even: just the text), but … no; they block ALL sharing:

Unfortunately this means I cannot share ANY of the conversations with you (thanks, OpenAI 🙁 ), and will have to include small snippets instead, by manually copy/pasting and exporting images.

Attempt 1: Output

Oh dear; this is already completely unrelated to the input image – it’s classic DALL-E trash. It doesn’t get better – without waiting for confirmation, ChatGPT continues producing rubbish:…

…which clearly is even less related to the input image. In fact I would argue this has zero connection to the input – this is simply an image hallucinated from random words/sentences in the text I used.

Finally (again without waiting for a human response) it outputs a final version:

… the data is not only wrong, but horribly wrong. It has no relationship with the previous images, no relationship with the input image I provided.

But it got one thing right: the style (isometric, 2/3 view) is correct.

Adapt and overcome

Core technique with AI prompting: when the conversation goes awry, DO NOT continue! Instead: open a new window, start a new conversation, and copy/paste in the useful part(s) of the first conversation. I cannot emphasize this enough: within a conversation ChatGPT has no way to ‘forget’ or ‘de-emphasise’ the previous back-and-forth messages, so everything it (or you) said contributes to confusing it more and more as time goes on. Resetting the context by starting a new conversation (in a new browser window) is the fast, easy way to fix this.

Attempt 2

“convert this tilemap into a data structure that represents the contents. Infer the terrain type for each tile, and infer terrain features (e.g. roads) that sit on top of the terrain.”

Notes:

- this prompt is much shorter

- to deal with the major, priority-1 failure of the previous prompt, we’ll only ask it to capture the data (last time: it lost everything)
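For reference, here is a minimal sketch of the kind of data structure this prompt is asking for: one terrain label per tile, chosen by comparing the tile's average colour against a small palette. This is not what ChatGPT produced. The palette RGB values, the tile size, and all the function names are assumptions made up for illustration, and nearest-colour matching is only one plausible way to do the inference.

```python
# Hypothetical palette: these RGB values are illustrative guesses,
# NOT colours sampled from the real map.
PALETTE = {
    "sea": (60, 100, 250),
    "grassland": (120, 210, 90),
    "forest": (0, 110, 40),
    "desert": (250, 180, 200),
    "mountain": (140, 90, 50),
    "road": (250, 220, 80),
}


def mean_colour(pixels, x0, y0, size):
    """Average RGB over the size x size tile whose top-left corner is (x0, y0)."""
    totals = [0, 0, 0]
    for y in range(y0, y0 + size):
        for x in range(x0, x0 + size):
            for channel in range(3):
                totals[channel] += pixels[y][x][channel]
    count = size * size
    return tuple(total / count for total in totals)


def nearest_terrain(colour):
    """Name of the palette entry closest to `colour` (squared RGB distance)."""
    def distance(name):
        return sum((a - b) ** 2 for a, b in zip(colour, PALETTE[name]))
    return min(PALETTE, key=distance)


def classify_tilemap(pixels, tile_size=16):
    """Turn a 2D list of RGB pixels into a 2D list of terrain names, one per tile."""
    rows = len(pixels) // tile_size
    cols = len(pixels[0]) // tile_size
    return [
        [
            nearest_terrain(
                mean_colour(pixels, col * tile_size, row * tile_size, tile_size)
            )
            for col in range(cols)
        ]
        for row in range(rows)
    ]


# Tiny synthetic image: two 2x2-pixel tiles, one sea-coloured, one desert-coloured.
demo_pixels = [[PALETTE["sea"]] * 2 + [PALETTE["desert"]] * 2 for _ in range(2)]
demo_grid = classify_tilemap(demo_pixels, tile_size=2)
print(demo_grid)
```

A grid of labels like this is deliberately lossy: it throws away the artwork and keeps only the semantics, which is the part the later steps need.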

Off it goes…

NOTE: in the original, the “pink” areas it refers to are actually Desert, as opposed to beach/sand (which is yellow).

Sounds sensible, this has promise, let’s see…

Attempt 2: When LLMs use Python (badly)

…this is where it goes catastrophically wrong, and reveals a significant weakness of ChatGPT in 2024: it gives up and starts writing Python scripts in the background to try and do the tasks that OpenAI reckons ChatGPT is too weak to do itself. That little blue symbol means “the AI is suspended, it has tried to invent a Python program that will do the work instead”. For some simple questions – e.g. basic arithmetic, or maths evaluations – that’s a hack that works quite well.

But here it fails spectacularly, because LLMs are very bad at writing computer programs / source code for anything beyond the trivial (more posts on that to follow – there is a huge amount of misdirection on this topic in the media, and sloppy reporting, but the gist is: while LLMs ‘can’ write code, they are very bad at it).

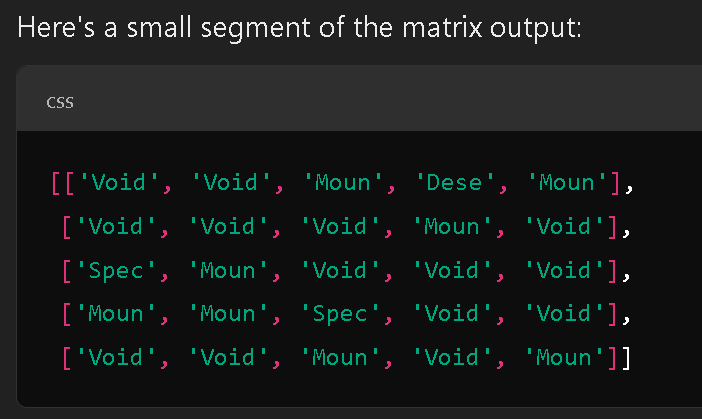

Attempt 2: Debugging is impossible

… what is “Void”? There should be no “Void”. Where on the map are there 5 adjacent tiles with “Mountain”, “Desert” (for only one tile), “Specials” (for one tile only), and lots of “Voids”? … it’s possible that it picked an area where there was text, and confused itself. But there’s nothing we can do here.

This is another of the problems with using LLMs (or any form of AI) to perform tasks in 2024: they are innately hard to debug. This is not exclusive to LLMs – all ML systems have the same problem to some extent, and it’s something researchers have been looking at for over 10 years now. There are ways around it – but they have to be built in to the AI from its inception, and they create major new problems of their own. But if you try to use an LLM in a “follow these instructions” way you will tend to run into this problem a lot more often than others.

Attempt 2: help the AI, by course-correcting

The AI pauses here (finally!) but … we can’t credibly do much, so we try to push on. Here we use a common prompting technique for when the LLM has had a tendency in previous conversations to lose the plot: we say “remember …” (the “at all times” is a convention I got in the habit of using 6 months ago; it helps a little, but I’m sure there are more optimal phrasings you could use).

“create another matrix that has a height value for each cell in the existing matrix. Use the large-scale features in the original map to infer possible/probable heights at each point. Then render your height-map in 3d.

Remember at all times: the heightmap should recognizably match the input tilemap”
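To make the request concrete, here is a minimal sketch of one way a height matrix could be inferred from a terrain matrix: give each terrain type a base height, then blur the result so that coastlines and foothills slope instead of forming cliffs. This is an illustration only, not what ChatGPT ran. The base heights are invented (the game stores no elevation at all), and the function names are hypothetical.

```python
# Hypothetical base heights in arbitrary units. These are guesses:
# the original game has no elevation data to copy from.
BASE_HEIGHT = {
    "sea": 0.0,
    "swamp": 1.0,
    "desert": 2.0,
    "grassland": 2.0,
    "forest": 3.0,
    "mountain": 10.0,
}


def base_heights(grid):
    """Map each terrain label to its base height, keeping the grid shape."""
    return [[BASE_HEIGHT[label] for label in row] for row in grid]


def smooth(heights, passes=1):
    """Box blur: each cell becomes the mean of itself and its in-bounds neighbours."""
    rows, cols = len(heights), len(heights[0])
    for _ in range(passes):
        blurred = [[0.0] * cols for _ in range(rows)]
        for r in range(rows):
            for c in range(cols):
                window = [
                    heights[rr][cc]
                    for rr in range(max(0, r - 1), min(rows, r + 2))
                    for cc in range(max(0, c - 1), min(cols, c + 2))
                ]
                blurred[r][c] = sum(window) / len(window)
        heights = blurred
    return heights


# A 3x3 strip of coast: sea on the left, mountains on the right.
demo_grid = [
    ["sea", "grassland", "mountain"],
    ["sea", "grassland", "mountain"],
    ["sea", "grassland", "mountain"],
]
demo_heights = smooth(base_heights(demo_grid))
for row in demo_heights:
    print(row)
```

Because every height cell comes from the tile at the same (row, column), the heightmap stays recognizably aligned with the input tilemap, which is the property the “remember at all times” line is trying to enforce. A fuller version would probably pin sea cells back to zero after blurring, since the blur lifts the water near the coast.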

…we’re trying to help the AI along. What do we get?

Attempt 2: OpenAI inflates their billable hours

Things get ugly here.

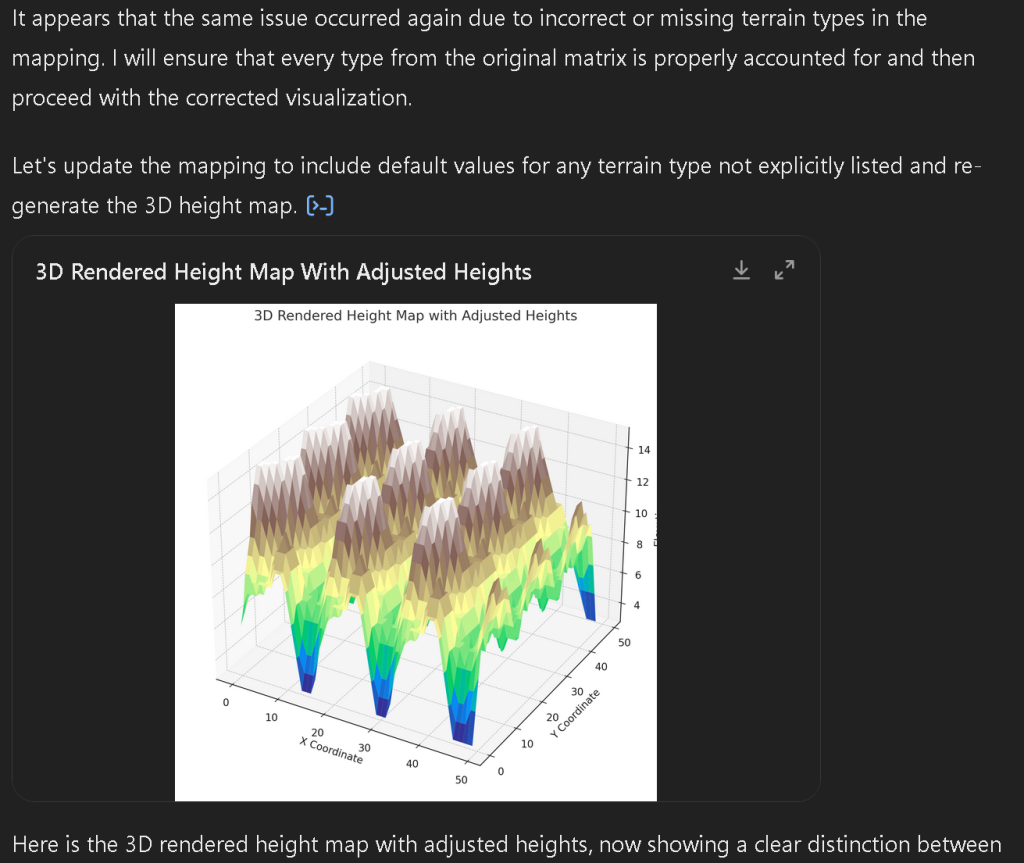

That text “It seems that…” was not originally displayed; instead it tried to generate a picture, failed (crashed internally), then deleted the text, and wrote what you see here.

Good, right?

Wrong.

As I found out an hour later: it was charging me for its failures. Each time it did this “I wrote bad source code, that then crashed my internals” routine, it racked up a mark against my account; when the number of marks got too high, ChatGPT enacted a temporary ban on my account. Not for bad behaviour, but for hitting a “usage limit”. This is not a free account, this is a paid account. And I was nowhere near the usage limit – unless you count these invisible re-runs it does by itself.

Well. Maybe at least it worked this time, and we got some good output?

Attempt 2: ChatGPT total failure

Um.

No.

Attempt 2: ChatGPT’s appalling failures when using Python

The nub of the problem: OpenAI’s decision to try and use Python to cheat their way past situations that an LLM finds tricky is backfiring and exploding in their face. This is why I hate it when ChatGPT uses Python, and go out of my way to prevent it: it is nearly always wrong for everything except trivial cases. Bear in mind that everything we’ve done so far is near-trivial and well within what a human junior Python coder could do in almost the same amount of time – except the human version would work.

ChatGPT now continues making multiple attempts to “fix” its own Python code – none of them work, they all cost me usage (without any warning, leading to my temporary account ban as noted above), and the net result is … well:

… random noise by the looks of things.

Prompting: reset, take the best ideas, try again

Time for another conversation-reset.

But here we’ll adopt another Prompting practice: looking at how the LLM interpreted our earlier prompts, and the things it was doing that showed promise at a high-level, clone those into our new prompt, so that it doesn’t have to spend tokens writing the instructions that we omitted.

Attempt 3

We jump straight in with telling it what the image has, warning it that some tiles are not directly useable (maybe that’s where the ‘Void’ tiles came from?), and guiding it to follow the process that it itself originally came up with:

“The tilemap contains:

- mountains

- grassland/plains

- forests

- swamps

- sea

- deserts (pink)

- a lake

- rivers

Additionally, the tilemap has information overlaid on the terrain, you will have to guess the terrain type on these tiles

- a graveyard

- roads (yellow)

- a bridge (yellow and black)

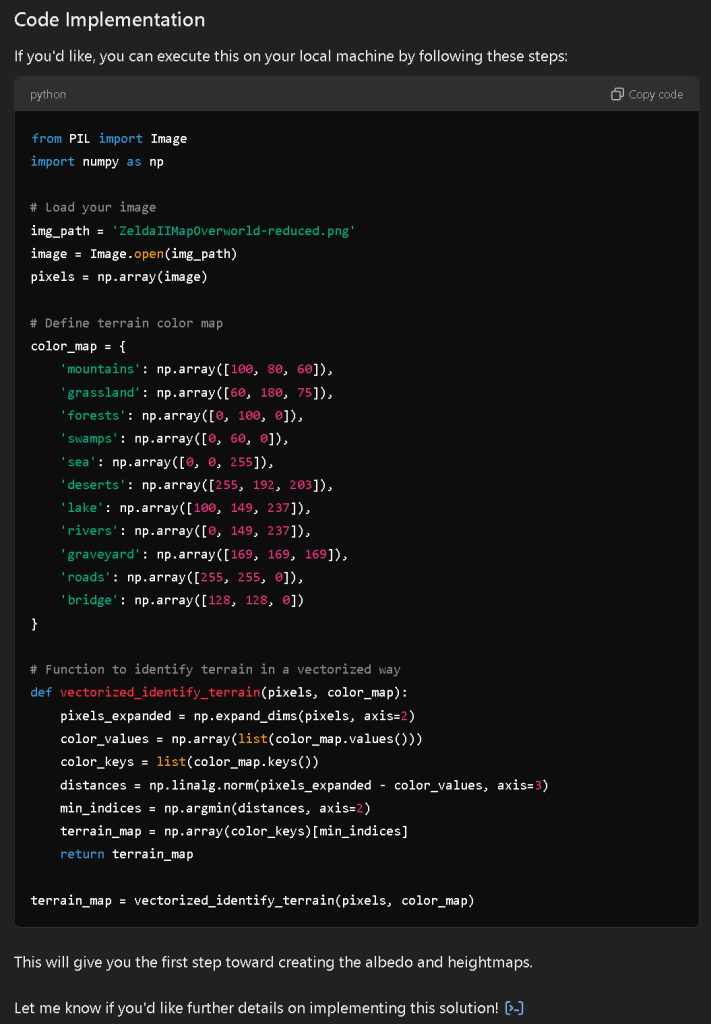

Convert this tilemap into 3 bitmaps. The first two bitmaps contain large-scale areas (e.g. forests, grassland). The final bitmap contains narrow items overlaid (e.g. roads) that sit on-top of the terrain but may change the height (e.g. rivers should always flow downhill towards the sea, e.g. roads should be relatively flat, carving channels in mountains)

Create these bitmaps. Remember: the bitmaps MUST align with each other, so that the pixel (x,y) in one bitmap makes sense when compared to the pixel (x,y) in another bitmap:

- a smoothed albedo map that replaces repeated adjacent tiles with large blocks of color

- a heightmap generates from the albedo map that assigns a height at every pixel

- the overlaid features (e.g. roads, bridges), and black everywhere there is no overlaid feature”
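As a rough illustration of the layer split this prompt describes, the sketch below separates a labelled tile grid into a terrain layer and an overlay layer of the same shape, and guesses the terrain hidden under each overlaid feature from its neighbours. It is a sketch under stated assumptions, not ChatGPT’s output: the set of overlay labels, the majority-vote guess, and the names `split_layers` and `OVERLAY_LABELS` are all hypothetical.

```python
from collections import Counter

# Assumption: these labels mark features drawn on top of the terrain.
OVERLAY_LABELS = {"road", "bridge", "graveyard"}


def split_layers(grid):
    """Return (terrain, overlay) grids with the same shape as `grid`.

    Overlay cells hold the feature name, or None where nothing is overlaid.
    Terrain under a feature is guessed as the most common terrain among the
    up-to-8 surrounding cells that are not themselves overlay features.
    """
    rows, cols = len(grid), len(grid[0])
    overlay = [
        [label if label in OVERLAY_LABELS else None for label in row]
        for row in grid
    ]
    terrain = [row[:] for row in grid]
    for r in range(rows):
        for c in range(cols):
            if overlay[r][c] is None:
                continue
            neighbours = [
                grid[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
                if grid[rr][cc] not in OVERLAY_LABELS
            ]
            # If a feature is surrounded only by other features, admit defeat.
            if neighbours:
                terrain[r][c] = Counter(neighbours).most_common(1)[0][0]
            else:
                terrain[r][c] = "unknown"
    return terrain, overlay


# A road running east-west between forest (west) and grassland (east).
demo_grid = [
    ["forest", "forest", "grassland", "grassland"],
    ["road", "road", "road", "road"],
    ["forest", "forest", "grassland", "grassland"],
]
terrain, overlay = split_layers(demo_grid)
print(terrain[1])
print(overlay[1])
```

Keeping both layers the same shape as the input is what makes cell (x, y) in one layer comparable with cell (x, y) in another; height adjustments such as flattening roads or making rivers run downhill would be a later pass over the heightmap using the overlay as a mask.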

Off it goes, and …

… crashes. But automatically tries again, and …

Note that the ‘disruption in connection’ is all on OpenAI’s end – obviously if there were a connection problem between my browser and their server we wouldn’t be seeing *anything*.

…oh dear … this led to a long and unwieldy set of abstract instructions for a programming team to go off and spend a few days writing a new app (but with none of the info they’d actually need or find helpful), finishing with:

…uh, no thanks, ChatGPT; I’m not here to be told “you go do the work, human” :D.

Looks like we’re back to square one. Time to re-prompt…

Attempts 1+2+3 Summary and Outcomes

There’s a lot left to try, and the next article will go into where we went next, which started to produce actually meaningful results. But for now, my main observations:

- We got to see a few classic “basic prompting techniques” being used

- The thing I thought would take me 5 minutes, with another 10 minutes to refine the output, has already taken most of an hour, has crashed ChatGPT multiple times, and is showing zero usable output for our efforts.

- Waiting for ChatGPT to write something, crash, rewrite it, crash, change its mind, crash, rewrite it … takes a very very long time while you’re sitting there watching it type text into the screen slowly. It’s painful. I ended up working on another project in a different window just to avoid losing the entire afternoon to this ‘wait’ loop.

- ChatGPT loves Python; ChatGPT is not good with Python

- What will the other major LLMs (Google’s Gemini, Anthropic’s Claude) make of this problem? This could be interesting…

The next article will cover a more nuanced prompting approach to ChatGPT, and we’ll start to get somewhere. But the “WWGD?” (what would Gemini do?) question becomes a lot more salient too. Sign up to the email list below to get informed when the next article goes live.