Summary

I used AI to write my new app. Great, but the AI kept crashing when it hadn't quite finished the work. And you can't resume halfway. What to do?

Anthropic (OpenAI’s rival) has been having a lot of problems recently – their servers keep crashing, their web interface keeps popping-up messages saying they are “downgrading all users” due to performance problems on their servers, features keep being remotely disabled by Anthropic, etc. The guys are having a hard time. But a deeply problematic side-effect for me is that I was in the middle of writing a new app using the LLM to write the code.

So … one single (but important!) LLM conversation I was trying to have keeps being ‘truncated’ (i.e. cut-off before it’s finished) by the Claude (the competitor to ChatGPT) servers. Because this isn’t a single file, but many of them – and I don’t know how they fit together – I can’t fix them. I would have to rewrite them myself (to a higher quality than the LLM, sure, but – the point is to spare me from that. Otherwise there’s little or no point using the AI for coding at all!)

You cannot simply “resume” a conversation when the conversation was generating so much output – the files generated so far will be incompatible with the ones generated later (as of November 2024 no commercial LLMs are that predictable when it comes to writing code – unless you force them to jump through hoops). I could have tried writing a new app … to let me directly connect to the LLM API … giving me access to the LLM’s Temperature control (which can usually – but not always – reduce randomness). But that would have taken so much time that, again, it would overall be quicker to simply write the main app myself.

So … what are we to do? This post goes through my solution – which ultimately worked.

1. We need more context: use Claude ‘Projects’

The first 3-4 attempts had each time generated between 5 and 20 files before Claude servers crashed (nothing to do with me!). Claude has a maximum of 5 files you can upload to a chat. Helpfully it suggests you ‘use a project’ to upload more files; unhelpfully (guys: you should fix this) the button for ‘select a project’ has no option for ‘new project’ – so you have to open a new browser window to claude.ai, login, open the sidebar, click on Projects, then create a new project.

I uploaded the 15-20 ‘best’ source files from a single previous conversation (I picked the conversation where the code seemed the most organized / least spammy).

2. Claude is largely useless at fixing code itself

This was a tiny project – one of the smallest projects you could possibly write – just a node.js backend with a few database records, and a react frontend with a handful of pages to display what’s in the database. And yet: as of November 2024: still much too complicated for a top-tier LLM to handle.

I tried getting Claude to add the missing files (that had never been generated yet), and then I intended to run new conversations to patch-up the gaps (e.g. where a missing-file that was created ended up using slightly different filenames to the originals – despite having them available – because this is a recurring problem Claude has).

This failed. Over and over again. For hours. Claude went round and round in circles, each fix broke the code it had just fixed minutes previously.

NOTE: this is despite me already ensuring that the average file length was 60-80 lines – extremely short! – to workaround Claude’s long-term failings in dealing with normal-sized source files.

After a couple of hours I gave up and tried something new.

3. Embedding a self-test routine into the code that Claude writes

I absolutely did not have time or energy for adding formal ‘unit tests’ to the project. It would potentially take me less time to write everything myself than hand-hold an LLM through the process of deciding on tests, installing a test framework, uninstalling it and replacing it with a new one when Claude says ‘Oops! Yes, that test framework is no longer supported, I shouldn’t have used it’ (Claude frequently does this when working with Javascript projects: the world of Javascript programming is the opposite of well-written libraries and frameworks, and even AI can’t handle the horror of it all), etc.

Unit-tests could be very useful – WITHOUT a framework (it would be foolish to add a framework: the point here is a system-test for ‘does this project even compile/build/run?’) – but only after the code has been written. And my problem so far has been getting the LLM to ‘finish what it started’ and write the missing code.

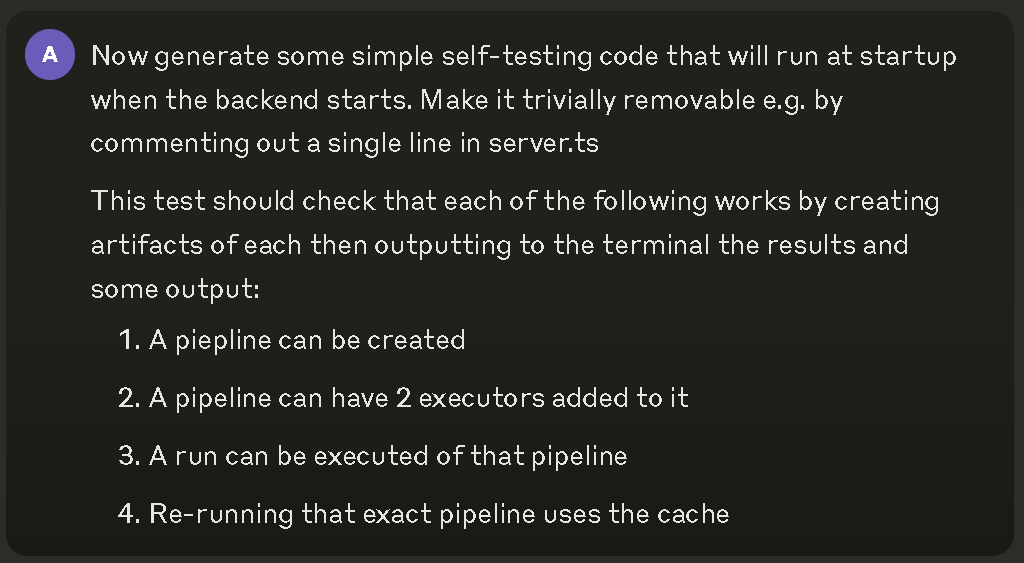

So I looked back at the specification – that I’d given to Claude explicitly, as a standalone file – and did a mental process of “what are the 3 main things, theoretically, that this project is trying to achieve?”. And told it to write that as a mini ‘test’ that it would build-in to the core application on startup.

My thoughts here were:

- Force the LLM to write “testing” inline with “the main app code” – so that it was more likely to notice / discover issues in its own code and correct them in-passing (this happened a few times both successfully and unsuccessfully; the unsucessful time I was able to correct automatically).

- Force the LLM to output directly to the commandline / terminal immediately at startup. This was selfish: I wanted to see instantly if the app was ‘probably working’, not have to manually test it. Based on many years of experience writing server apps I’ve learnt there’s huge value in having the app drop just a little bit of basic self-testing on startup (can I find my config file? is there any data in it? which port(s) am I opening – and did they work? etc). But it worked out to be an extra smart move in the end (see below).

- Prevent the LLM from trying to use a testing framework – that would add dozens more files, when it’s already failing to generate just 5 missing files. Trying to generate TWENTY-five would be guaranteed failure, its context is already frequently collapsing.

- Personally choose ‘what really matters’ and use as few words as possible. I’ve noticed many times over the thousands of prompts I’ve written that the more words you use in describing something the lower quality the output. (NB: despite the fact that the longer the prompt, the better the output – the key is to ‘cover more ground, but avoid over-specifying all the details’).

Here’s the prompt I used:

4. Feed-in the outputs from the console

Each time I ran the app, it would crash with some output.

I copy/pasted from the start of the self-test outputs to the first point that crashed, and included just enough of the filenames/line numbers that in theory the LLM could figure out what had gone wrong. I gave no information, no help – note: I didn’t even say “this failed”, I just copy/pasted the facts of the crash, and let it it infer that something had failed.

NB: when an app doesn’t even startup the crashes (in any language, but especially when doing work with web browsers) often generate false/incorrect error messages. I had no intention of reading / checking the line numbers myself. But I figured I’d give the LLM a chance, and see what it did with it.

Ultimately this worked extremely well, it kept using the outputs as the corrections, and with me funnelling the changes back and forth it eventually fixed the project.

I had to do a little more intelligent work along the way – when it started generating a huge chunk of SQL and trying to insert it into the server’s 3-line startup routine (!) I could see something had gone wrong – a quick search of the codebase found it was duplicating code that had its own source file already, so I simply hit the STOP button and then ‘gently reminded’ it that it already had that source and which file it was in.

My full chat-log with Anthropic/Claude LLM

Here it is: https://claude.ai/chat/267dd40f-19d1-41a3-8f3e-51543dacdea6

Oh! Except! … Anthropic’s facepalm backwards corporate policy is that: no-one is ever allowed to share with other humans. So … you can’t see it.

I’ll leave the link there in case they fix/change this at some point, or in case I find a way to share that is so far eluding me (couldn’t find anything in the UI or in their docs just now).