“Those who can … write software; those who can’t … use genAI” — but the ones using AI are achieving 10x outcomes with 1/10x effort. When it comes to genAI some engineering teams are being left behind by their non-technical co-workers. How do we fix that? Step 1: get serious about Prompt writing.

“Prompt Engineering” (which devalues the word ‘engineering’, probably why tech teams don’t embrace it) is surprisingly underrated by the mainstream tech industry, which is chasing custom LLMs and LLM tuning (why? So expensive! So little benefit!).

Instead it’s being heavily (HEAVILY) used by non-technical departments: marketing, sales, operations, customer service, finance, etc. As per the Autumn 2023 survey by Salesforce: 40% of employees are using GenerativeAI despite it being banned by their CISO/CIO/CTO.

But: non-technical departments don’t know good Engineering or Data Science practice – if they had the wisdom and involvement of their colleagues here they’d probably perform even better. As an Engineer, the first thing you always want is great tools. So let’s look at what exists today (April 2024) for supporting good practice in Prompt writing (aka ‘prompt engineering’).

Context

I’ve been using LLMs since the start of 2023, both in product development / building new AI-powered tech, and as personal assistants to improve my daily workflows. I’m doing multiple new AI experiments each week, from an end-user perspective, and I want a way to track, manage, version, test, analyse, and report on the good, bad, etc. i.e. make it more ‘engineering’ and less ‘chaotic mess’.

What we want/need (ELEMENTARY):

- Integrations: works (at least!) with OpenAI/ChatGPT, but most people want to try others (e.g. in 2024: Claude/Opus) – does the tool let you choose which you’re using today?

- History: can I tinker with a prompt, make lots of changes, then reject the bad outcomes and ‘go back to the version that worked really well yesterday’?

- Security/API keys: if it doesn’t use an API key then it’s (default) stealing your data – and whatever features you’ve already paid OpenAI/Anthropic/Mistral $$$ for will NOT be available to you

- Security/Legals: basic stuff, but … do they store (and read/steal) your data? Can you prevent them using it in their training? Or do they merely provide a conduit (passing it through, only providing the code)?

- GUI: OpenAI set the benchmark with their free, fast, decent-but-basic chatbot UI. Working without this – or something at least as good – would be a step backwards; putting any extra steps at all between “I just had an idea of something I want to try with an LLM!” and the moment you get the results back … is a bad idea

NOTE: all the above are beyond basic, I didn’t expect to have to write them down; they’re things that OpenAI did out of the box when they launched – if a service is doing less than that then it’s not even hit baseline.

What we want/need (BASIC):

- Version control/diffs: history is great, but … can we rapidly compare-and-contrast different versions of the same prompt, the same outputs?

- Search/Labelling: Can we label prompts and responses, categorise them, score them? So they’re easier to find later, or share with colleagues

- Templates/Variables: Can I write a generic prompt with placeholders for key words/phrases, then re-use it later for a similar-but-different problem, replacing only the placeholders?

- Speed/Performance: LLMs are slow; if the tool is slower than the LLM then that’s downright embarrassing, but also a big waste of time and roadblock on your own productivity (LLMs are already slooooooow)

- Price: Free? Purchased? SaaS? All are good options, but OpenAI charges a paltry $20/month, so… any $ costs need to be extremely well justified
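To make the “Version control/diffs” item above concrete, here is a minimal sketch that compares two versions of a prompt using Python’s standard `difflib` module. The prompt text and the `v1`/`v2` labels are made up for illustration, and none of the tools reviewed here are claimed to work this way.

```python
import difflib


def diff_prompts(old: str, new: str) -> str:
    """Return a unified diff between two versions of a prompt."""
    lines = difflib.unified_diff(
        old.splitlines(),
        new.splitlines(),
        fromfile="v1",
        tofile="v2",
        lineterm="",  # the split lines carry no newlines, so don't append any
    )
    return "\n".join(lines)


# Hypothetical prompt versions, purely for illustration.
v1 = "You are a helpful assistant.\nSummarise the text in 3 bullets."
v2 = "You are a helpful assistant.\nSummarise the text in 5 bullets."

print(diff_prompts(v1, v2))
```

The same function works just as well on two LLM outputs, which is roughly the “compare-and-contrast” workflow the list item asks for.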

What we want/need (ADVANCED):

- … 2024 tools haven’t got this far, so this is ‘wishlist’ territory…

- TDD/BDD: (probably, but depends on design/implementation)

- A/B Testing: (not convinced; touted by some providers, but few users seem to care – I haven’t seen an implementation yet that made me think ‘yes, I want that’)

First cohort

TL;TL;DR

- Worth a play:

- TypingMind works, organizes your conversations, has no free version ($50 one-time purchase), has some strong features (e.g. live preview of how many $$$ you’re spending as you type)

- PromptRefine works, is very clear/non-confusing interface, but the paid version is pricey

- Vidura is goofy and colorful, works fine – but are they sucking up your data? Probably

- PromptPerfect tries to do the PromptEngineering for you, using the LLM to … think of a better thing to say to … the LLM. Fun! Worth a tinker.

- Untested:

- Vaporware, but watch this space:

- Not impressed:

- PromptTools (HegelAI) – requires coding/Python install; both web versions crash out of the box

- Microsoft …. Prompt Flow – if you need 6 words just to name your product … it might be a bit too unwieldy for day to day use

- PromptEch – what if a Kanban board had ‘relations’ with a Chatbot? What unholy progeny would emerge? I tried it, but … I say: “Run away!”

- Not worth a detailed review:

- https://prompt.studio/ – requires access to your Google account (for no apparent reason).

- https://www.zenprompts.ai/ – no meaningful or relevant features listed on their site

- https://github.com/mshumer/gpt-prompt-engineer – nothing wrong, just it’s command-line only and it’s a micro-tool that only does one basic thing. But I do like the ELO ranking system it uses for rating prompts! See this image…

- Abandonware, ignore:

- Promptly, PRST, AI Prompt Manager – not even real products / dead already
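For readers who haven’t met the ELO-style ranking mentioned for gpt-prompt-engineer above: the sketch below is the textbook Elo update applied to two competing prompts. It is not taken from that project’s code; the K-factor of 32, the starting rating of 1200, and the function names are all assumptions chosen for illustration.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(
    rating_a: float, rating_b: float, score_a: float, k: float = 32.0
) -> tuple[float, float]:
    """Return new ratings after one comparison.

    score_a is 1.0 if prompt A's output was judged better, 0.0 if prompt B's
    was, and 0.5 for a draw. k (assumed 32 here) controls how fast ratings move.
    """
    exp_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - exp_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - exp_a))
    return new_a, new_b


# Two prompts start level; prompt A wins one head-to-head comparison.
print(update_elo(1200, 1200, score_a=1.0))
```

Run enough pairwise comparisons and the ratings sort your prompts from best to worst without anyone having to score them on an absolute scale.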

Selection Criteria

I cast the net as wide as possible (Google, ML/AI colleagues, LLM user-groups, mailing lists, etc) to find everything that claims to be a tool for aiding “Prompt Management”.

Some tools are purely about Prompts, others are “everything including the kitchen sink” which includes Prompts; I tried to cover both, but for the fat/monolith tools I ignored all their other (possibly great) features and only looked at the Prompt parts.

If you have one in spring 2024 that you love (or you’re building) and I missed it – please contact me and I’ll have a look and (time permitting) add it too.

Products / Reviews

… in no particular order …

PromptPerfect – https://promptperfect.jina.ai/

TL;DR

- Pure “prompt management” tool (BUT not quite)

- …instead it’s a one-trick tool that does something unique: “prompt re-writing / optimization”

Issues

Features

- Only one: automatically copy-pastes “Please improve this prompt” into your LLM/chatbot

Product Experience

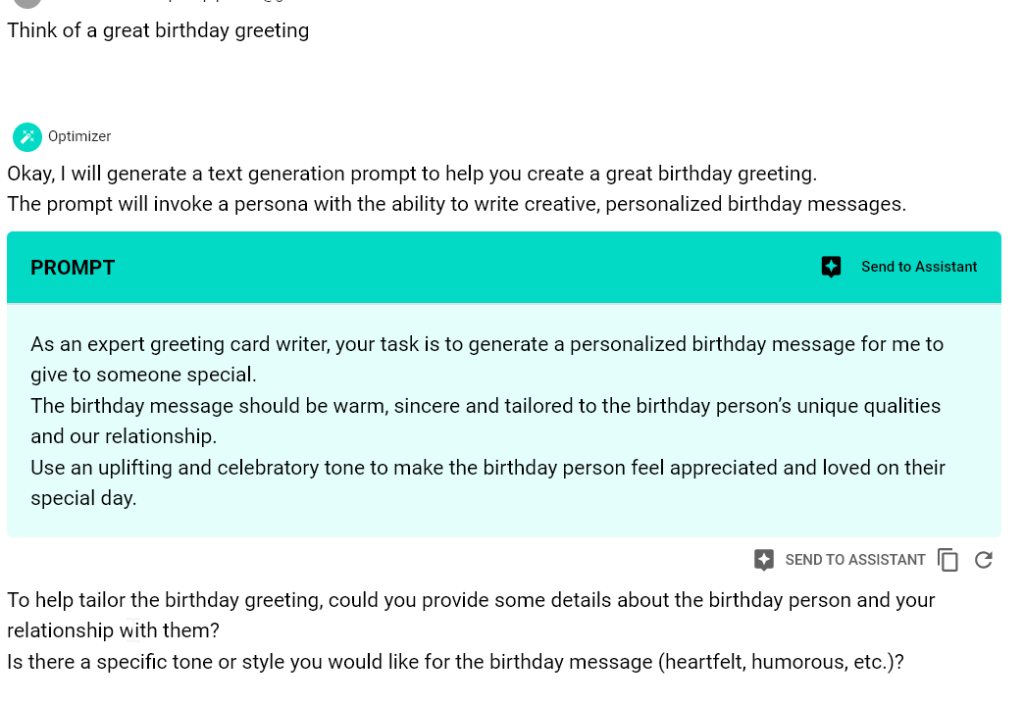

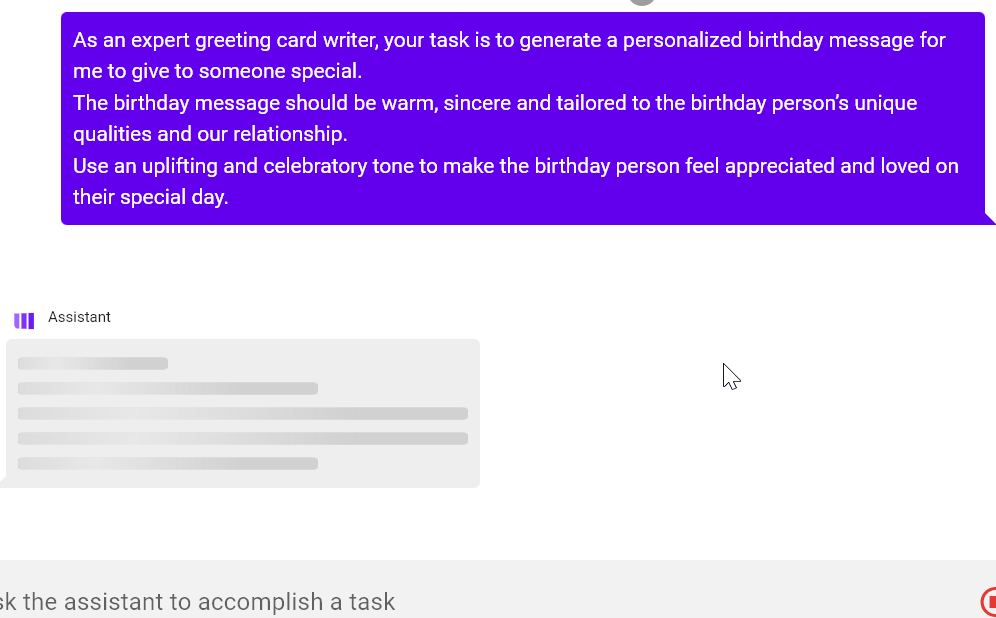

They’re focussed on their 1 feature, so … they have a forced ‘tutorial’ with non-skippable dialog boxes that appear in random places on the screen to prevent you escaping them quickly. But OK, get past that, and let’s play with this thing…

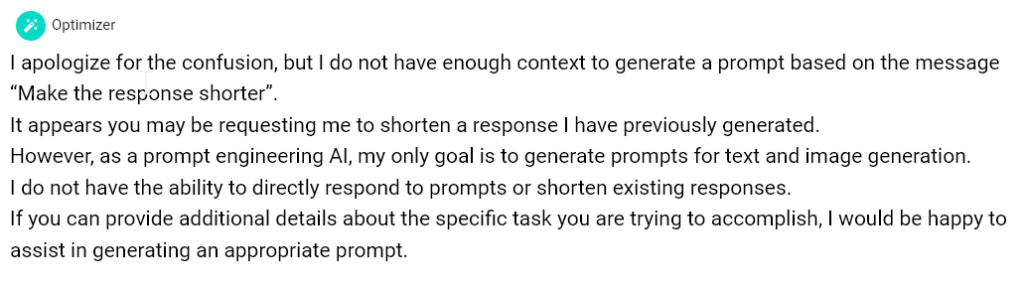

Unfortunately the core UX is pretty bad – it splits the screen in half, a “conversation with the ChatGPT/etc” and an “optimizer to improve the prompts you use on the other half” – so I expected to do this:

- Write a prompt in left hand side (LHS)

- See the result; if I don’t like it, click in right hand side (RHS) and optimize it

But that gets you this:

…turns out you have to ignore the big, obvious, UI, and instead use the small subtle INVISIBLE BUTTON that only appears if you mouse-over the magic pixels where it’s hiding. This is the button you have to click:

Can you see it? No? Why not, it’s SO OBVIOUS /s. Here’s that image again, but if you know the magic pixel and move your mouse over it:

LOL. Anyway, apart from some UX stumbles, functionally it’s fine. Pressing the magic button makes this appear on the RHS:

…hit that “SEND TO ASSISTANT” button, and it automatically sends the query across to the LHS. Annoyingly, it trashes your clipboard at this point (minor, but why did they do it? Are they using clipboard to transfer between windows – that’s not great if so).

Amusingly, while ChatGPT was happy to answer my ‘unoptimized’ query, it rejected the ‘optimized’ query demanding more information:

So. It’s a neat gimmick. But … do we need it?

Honestly?

No.

This is what you and I are doing (and doing it many many times better than PromptPerfect’s LLMs) when we embark on a “Prompt Engineering” exercise. My custom prompts aren’t even in the same ballpark as PromptPerfect’s.

But … worth watching; over time it could get better (if it gets better than you and I, then we’re really in trouble – won’t be much left for us to do! – or alternatively: we’ll all be retired at age 20, happily sitting on a beach all day long).

PromptRefine – https://www.promptrefine.com/

TL;DR

- Diversified, ‘many tools’ product, one of which is a “prompt management” tool

- Pure “prompt management” tool

- VERY clean/simple/direct UI

- Uses WindowAI for managing your API keys (novel!)

Issues

- Expensive for what it is

Features

- Templates/Variables in prompts? YES

- Diff? YES

- Supports API keys for LLM provider? YES (special: windowAI)

- Sensible Legal/Data/Security policies? YES (special: windowAI)

Product Experience

It’s very basic – but I like it. It Just Works, it’s simple and fast (although it’s a bit too lean on features).

I have to highlight too: it uses a radically different system for API-key management – https://windowai.io/ (https://twitter.com/xanderatallah/status/1643356106670981122) – where the keys are never stored in the app itself, they’re stored by each individual that accesses the system, in their own (personal, local) web browser. Interesting!

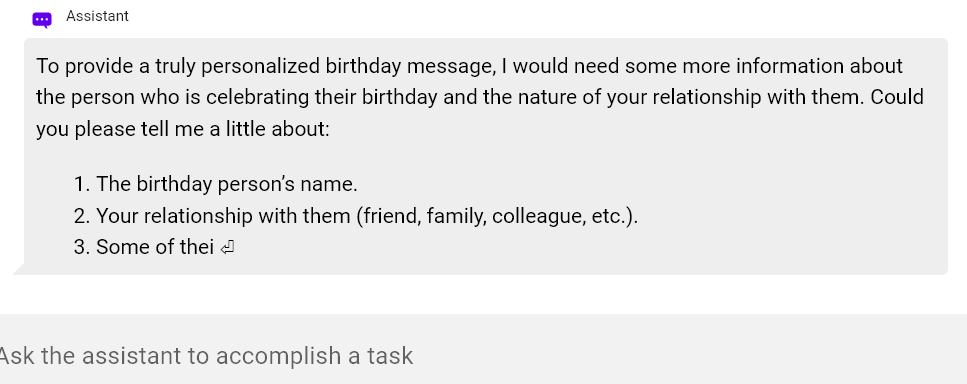

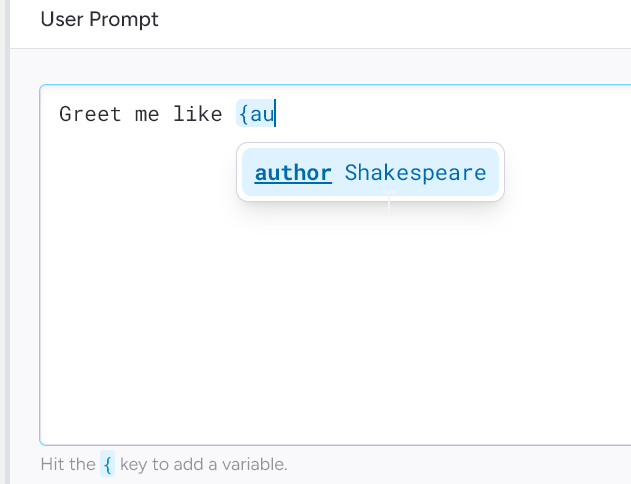

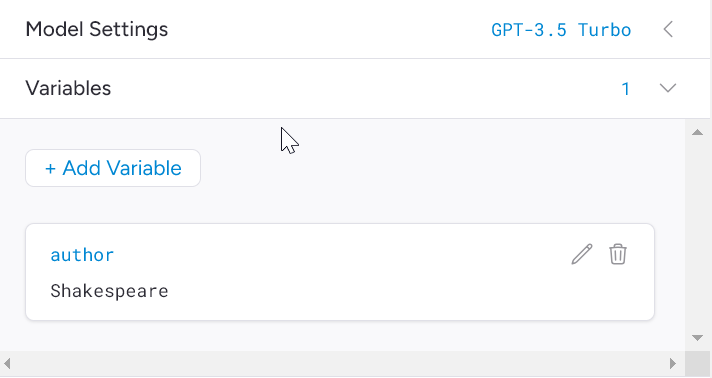

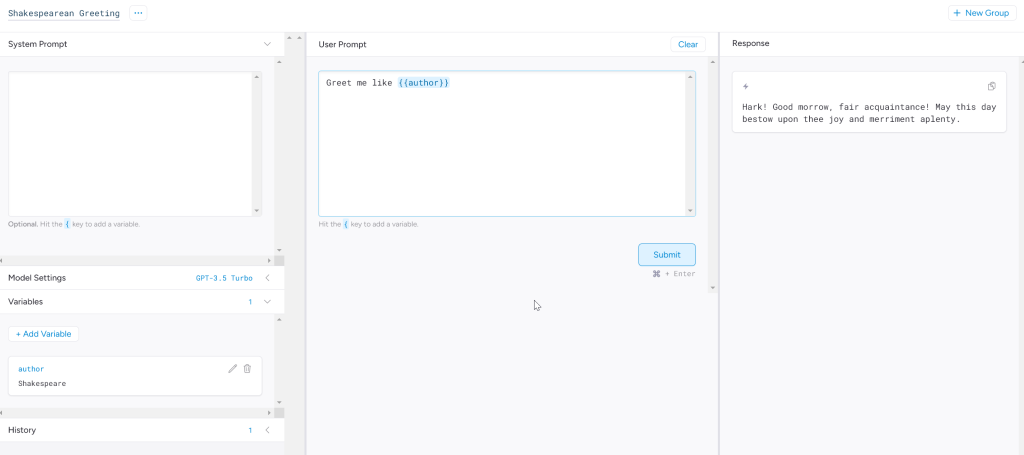

Feature: Templates/variables

Templates/variables worked out of the box, with clear affordances and simple interface:

…and behold! It works (big image, open it in a new tab):

That shouldn’t be a notable win – but compared to how many of them have non-working / half-working implementations of this feature, it is.

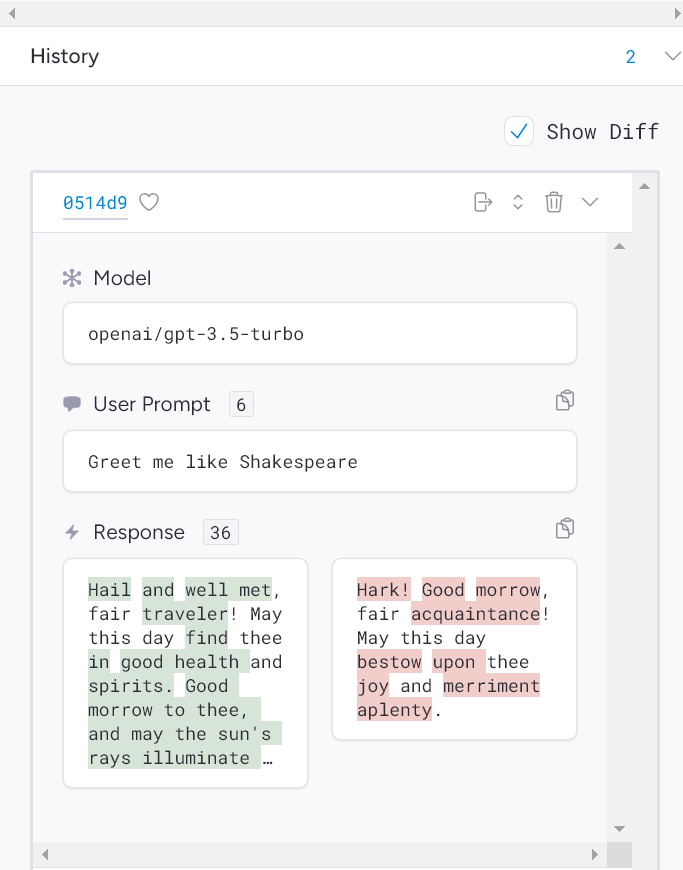

Feature: Diffs / Version Control

In the bottom left it has a modern color-coded Diff system for seeing how the output of a run differs over time – useful when playing with Temperature, or trying to understand the limitations of a given Prompt:

Pricing

This is … steep. I’m not sure how they’re justifying it, given their tool doesn’t do much – this is where the simplicity falls down. Compare it to TypingMind (which does a little less but a whole lot more) and note this cost here is monthly – TypingMind is only 20% more for a one-off lifetime fee. And if you buy the “Plus” package … that’s more than a YEAR of your OpenAI subscription! For a tool that just provides some nice-to-haves! Ouch.

LLMStudio – https://github.com/TensorOpsAI/LLMStudio

TL;DR

- Diversified, ‘many tools’ product, one of which is a “prompt management” tool

- Python-based tool: you’ll need a local install of Python first

- Aimed explicitly at engineers, you’re expected to write code to use it

Issues

- The “Quickstart” doc is literally just a source code folder; um, great!

Features

- Token cost previews? YES (UNTESTED)

- Supports API keys for LLM provider? YES

- Sensible Legal/Data/Security policies? YES

Product Experience

I’m not ready to install and maintain an entire Python ecosystem yet, just to try out a tool that’s clearly inferior to what else is out there, and there was no other way to use this, so I’m postponing playing with it for now.

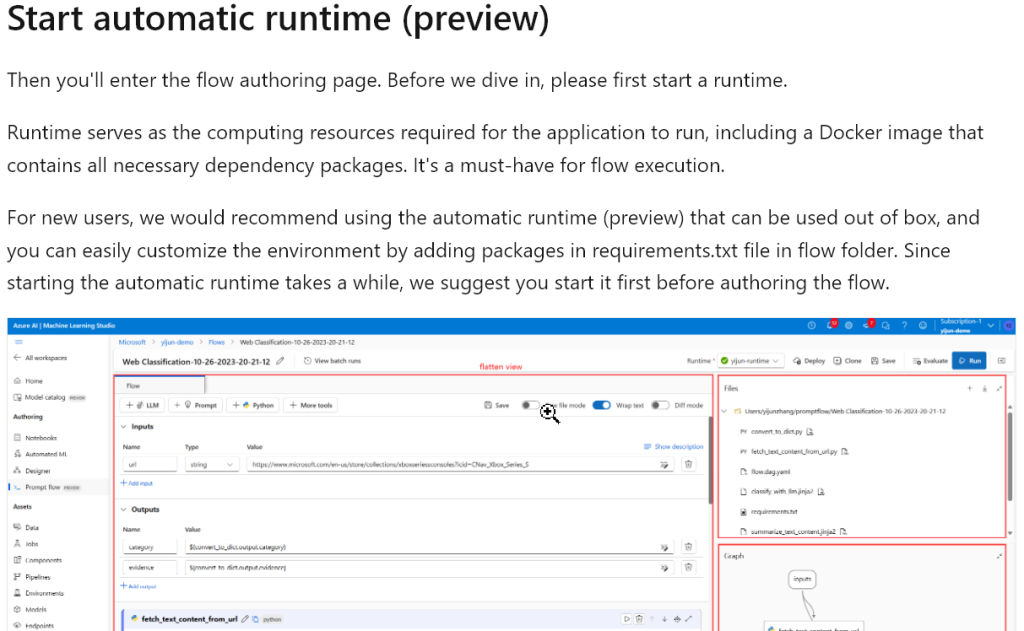

Microsoft Azure Machine Learning Prompt Flow – [too long URL]

TL;DR

- I almost died from the Business Buzzword Bullsht Bingo

- Diversified, ‘many tools’ product, one of which is a “prompt management” tool

- Life is too short; this is massive overkill (and very long setup cost) for Prompt work

Issues

- N/A – I didn’t try it

Features

- It’s Microsoft, a major investor in OpenAI – you can bet it’ll be well maintained for a long time to come

- It’s Microsoft, the owners of Azure – if you’re an Azure user, you may well find this is quick/easy to set up (because you’re already using similar systems from MS)

- Do you like coding? Because you’ll be coding (in a proprietary flowchart system, and with a secondary data system, and a tertiary source code system)

Product Experience

“Facilitating … the … leveraging … the … agility … iterative refinement … the … process” (all real quotes). I fell asleep, fell off my chair. I think I may have hit my head, might be a concussion. I’m in good company with whoever wrote the copy for this product.

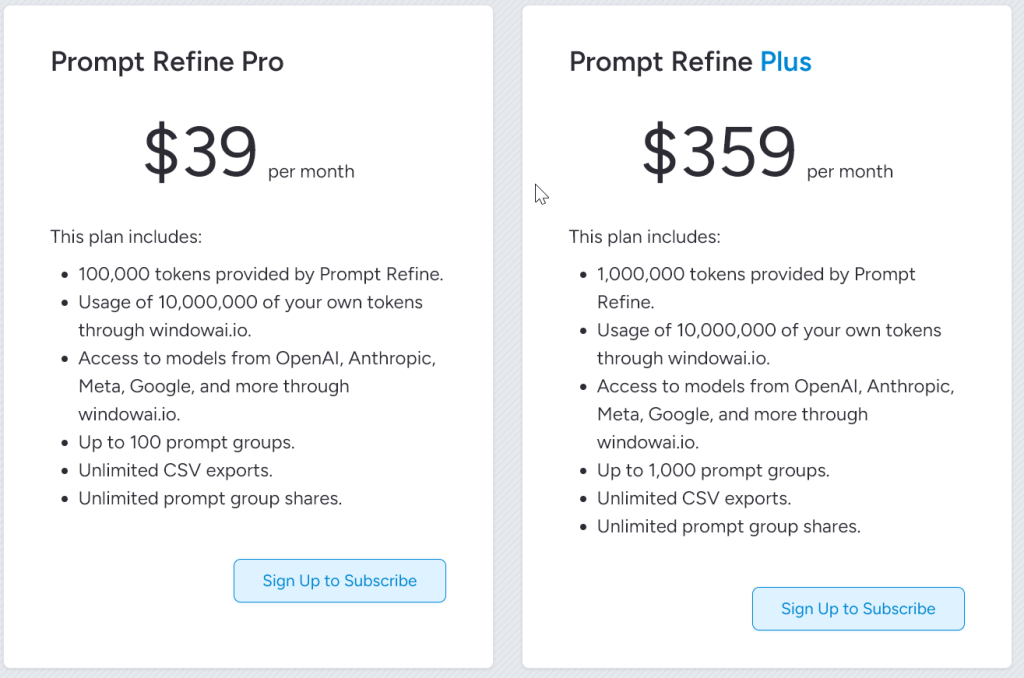

One look at the ‘prerequisites’ (before even ‘setup’, which is a long page in its own right) suggests you don’t have the time for this, unless you’re already an active Azure developer:

It gets worse (this is several pages of setup instructions later, and still nothing you can use yet):

Eventually you learn that:

- You have to write a program … to start an LLM

- You have to write another program … to be able to use the LLM

- Eventually you get to write a prompt … and embed it inside your programs

I’m sure it’s a lot of fun if you want to noodle around with LLMs, or build a complex custom backend; it’s not much use if you have work to do and want to use genAI to do it.

PezzoLabs – https://github.com/pezzolabs/pezzo

TL;DR

- Diversified, ‘many tools’ product, one of which is a “prompt management” tool

- Aimed explicitly at engineers, you’re expected to write code to use it

- UI for Prompt Management is the 4th of their 6 primary screenshots; looks good, but it’s clearly not a primary feature for them

Issues

- Requires you to manually install it as a full-fat complex system on your local machine, run some scripts by hand, etc. Not (currently) available as a 0-shot app install (or Docker container).

Features

- ?

Product Experience

NOT TESTED YET … I don’t have an available desktop-Docker setup to run this on right now.

Portkey – https://portkey.ai/

TL;DR

- Gmail user? BANNED! [Founders report: too many bots / fake signups, so they block-banned mainstream email services]

- ? TBC – Diversified, ‘many tools’ product, one of which is a “prompt management” tool

- One of the few professional / enterprise ready products in the roundup

- Free version fine for individual use (300 requests/day), team version is $100+/month

- Outputs are deleted aggressively, you’ll have to store them (github etc) yourself if you need them

Issues

- Pricing is HIGH: minimum spend is 5x more than the cost of the LLM/GenAI model

- On the free plan you can’t retry requests (which is needed often when LLM providers do unplanned rate-limiting)

- Outputs are deleted on 30-day rolling basis: save them manually or lose them

Features

- Templates/Variables in prompts? YES

- Supports API keys for LLM provider? YES

- Sensible Legal/Data/Security policies? YES

- Support? Fast (probably)

Product Experience

Reached out to them about the “gmail accounts are banned issue” and got an almost immediate friendly detailed reply from one of the Founders. That typically implies a fast customer-support turnaround.

Pay-for service, so let’s start with the https://portkey.ai/pricing page…

[img: $0/ $99]

Pricing Conclusion: free version is probably OK, except that losing the output every 7 days is painful. Even at $150/month you’re losing almost all your data (the outputs!) unless you manually save it – which is already free from OpenAI, and is part of the core tool / service we were trying to pay for in the first place.

Security/Data integrity: they work by taking your API key and acting as your account on each service (this is the minimum standard I expected of all of them: utilises whatever pay-for LLM access I already have, and ensures that data integrity is under my explicit control since it’s whatever I agreed with the LLM provider): https://docs.portkey.ai/docs/product/ai-gateway-streamline-llm-integrations/virtual-keys

“Your API keys are encrypted and stored in secure vaults, accessible only at the moment of a request. Decryption is performed exclusively in isolated workers and only when necessary, ensuring the highest level of data security.”

The product is clearly engineer/coding-centric, but comes with an online SaaS UI so you can use it both ways. The part that focusses on prompting is: https://docs.portkey.ai/docs/product/prompt-library

“Versioning of Prompts

Portkey provides versioning of prompts, so any update on the saved prompt will create a new version. You can switch back to an older version anytime. This feature allows you to experiment with changes to your prompts, while always having the option to revert to a previous version if needed.”

“Prompt Templating

Portkey supports variables inside prompts to allow for prompt templating. This enables you to create dynamic prompts that can change based on the variables passed in. You can add as many variables as you need, but note that all variables should be strictly string. This powerful feature allows you to reuse and personalize your prompts for different use cases or users.”

PromptTools / HegelAI – https://github.com/hegelai/prompttools

TL;DR

- Source-code only tool (effectively)

- Python/Jupyter-based tool: you’ll need a local install of Python, or a hosted Jupyter instance

- OpenSource; has a Discord for support

- GUI exists but undocumented, and appears to be a thin wrapper on the source code (could be re-written in about half an hour using automated webpage-generation libs)

- Failed for me out-of-the-box, couldn’t get either of their demos to work

- Weakly documented, poor onboarding

- Functionality is very simple/basic

Issues

- Requires you to install Python first, and debug that, if you’re not already developing in Python

- “Hosted” versions (their own demos) are broken: Google Colab crashes out-of-the-box, Streamlit hangs (waited more than 15 minutes for it to fail to respond to “Greet me like Shakespeare”)

Features

- Some basic Python library API calls that seem the same as you get in standard unit-testing libraries

- A web front-end that has no docs and seems quite buggy (c.f. 10+ minutes wait for nothing to happen)

Product Experience

It has a Jupyter Notebook … that doesn’t work.

It has a Playground (very simple GUI) … that doesn’t work.

Everything else requires you to install Python locally and then download/install this software. I assume this works fine, this is the only part that’s documented, it’s presumably the ‘real’ tool.

Here’s the GUI. How do you get variables/templates to work? No idea, it’s not described anywhere (and their requests all hang anyway).

Reading through the Python docs it has a very small number of features, the main ones being:

- “Experiments”: parameterize your Prompt and automatically run N copies of the prompt asynchronously, once for each combination of your parameters

- “Harnesses”: seems to be a basic “freeze some of the parameters, enable you to re-run later”

As far as I can tell, these are features that standard Unit-Testing libraries have had for many years, only these are … more basic. There’s presumably some convenience in here, but compared to what you’d already have if you were using off-the-shelf testing frameworks (and if you’re doing everything in code: why aren’t you using those already?) I’m not seeing it.
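To show what “once for each combination of your parameters” boils down to, here is a minimal sketch built on the standard library’s `itertools.product`. It does not use the PromptTools API; `expand_prompts`, the template, and the parameter values are hypothetical, and actually sending the prompts to an LLM (asynchronously or otherwise) is left out.

```python
from itertools import product


def expand_prompts(template: str, params: dict[str, list[str]]) -> list[str]:
    """Fill the template once for every combination of parameter values."""
    names = list(params)
    combos = product(*(params[name] for name in names))
    return [template.format(**dict(zip(names, combo))) for combo in combos]


# Hypothetical experiment: 2 personas x 2 languages = 4 prompts.
prompts = expand_prompts(
    "Greet me like {persona}, in {language}.",
    {
        "persona": ["Shakespeare", "a pirate"],
        "language": ["English", "French"],
    },
)

for p in prompts:
    print(p)
```

Parameterised test cases in off-the-shelf testing frameworks give you the same grid expansion, plus reporting, which is the point being made above.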

TypingMind – https://www.typingmind.com/

TL;DR

- One of the few professional / enterprise ready products in the roundup

- Adds some good non-basic / advanced features for prompt-engineering

- …e.g. live preview of how much this request will cost you / chat has cost so far, in tokens and $

- …e.g. split/fork a conversation, rewinding it to go in a different direction

- Has most of the core features we need, e.g. searchable, categorisable chats

- Very easy/fast/clean UI and UX

- Optional self-hosted version (no extra cost) if you’re concerned about security

- Won’t even start without a working ChatGPT / etc API key (good!)

Issues

- Templates/Variables currently broken in the web version

- Error messages for setting up your OpenAI key are wrong/misleading

- Seems to require “all permissions” on your OpenAI account (which it shouldn’t!)

Features

- Tags and folders for organizing your chats

- Searchable chats

- Fork conversations

- Import your existing chats from ChatGPT

- AI plugins (like OpenAI’s withdrawn ones) that work across any LLM

- Optional input via voice/speech (all platforms)

- * Offline / Run locally / Self-hosted versions available

- Share/export conversations nicely formatted

- Templates/Variables in prompts? BUG/BROKEN

- Token cost previews? YES (# and $)

- Supports API keys for LLM provider? YES

- Sensible Legal/Data/Security policies? YES / MOSTLY (see below)

Product Experience

Website drops you straight into an LLM chat – which is normal, except this one is a “buy once, never pay again” product, not SaaS, so I was expecting to be routed via a ‘download + install first’ or similar. Turns out: you buy once, and from then on can either use it online (via their website) or offline (via your local machine) … or even: self-host on your own website (for your private use, with them never seeing your chats). Nice.

…but as soon as we try to chat we get this reassuring box: ‘API key please’ (good!):

…except it immediately crashes, and accuses me of not having a paid account. Uh, no. Please debug and try again, guys (for the record: this is from a paid OpenAI / ChatGPT plus account):

… I suspect the reason is: that key has been granted limited access, to only the things it needs access to, and that TypingMind is aggressively trying to grab private data from the OpenAI account and being rejected. Reported to TypingMind support, will update when I get a reply.

However … the same error message also appears if you have PAID for ChatGPT but have NOT YET paid for OpenAI-API. The text is in there, somewhere, in that big red wall of text, but obscured by their obsession with “you don’t have to pay for ChatGPT Plus” – what they mean is:

“Your API key is incorrect or has not been enabled yet by OpenAI. There are multiple possible causes, please check:

- If you have a paid ChatGPT account this is NOT sufficient – OpenAI has separate billing for ChatGPT and the API

- If you have a paid API account but your $ balance has no credits it won’t work until you add a billing source and pre-pay some credits

- We require read/write access to all your private details on OpenAI – you need to give us an ‘all access’ API key”

Give it all the above (an API key … on a paid OpenAI-API account .. with credit balance … and full-access) and it works.

We can now use the tool … except all the features (even the most basic: showing the list of chats) are disabled. If you don’t purchase it, your experiment ends here. It’s a bit weird: a live “start using it immediately” that prevents you using the entire tool — but given the “pay once, NO subscription” it makes a little sense. What it does mean though is: you can go through this setup/onboarding stuff before you’ve paid a single cent, and I like that: more annoying to purchase something and only afterwards find it doesn’t work / requires other pay-for services you hadn’t realised it required. With OpenAI integrated we get a neat indicator in bottom left of screen:

… so let’s purchase it and try the paid Standard plan. Standard credit-card details etc etc all worked instantly, no problems.

Feature: Organizing chats

Creating a new ‘folder’/category is trivial, and then you drag/drop chats in the sidebar into the folders you want. All works as expected, clean and easy UI.

Feature: Searching chats

Start typing and it immediately gives live results highlighting where in the chat the text was found:

(here I’d created a folder/category “Test runs” and drag/dropped a few chats into it, so it’s showing both the containing folder/category and the chat title, and text. The title – “Greetings and Knowled…” – is OpenAI’s auto-generated title for that conversation.)

Feature: Templates/Variables

Officially supported, but just plain doesn’t work. Nothing I did got this to work, and the total documentation on it is a single screenshot that cannot be reproduced.

My *guess* is that this feature only works in the standalone MacOS downloadable client app (although they claim it works on the browser version in their sales literature). Very disappointing – but I’ve reported to their support team, will update when I get a response.

Now onto some more advanced features…

Feature: token counts and controlling the LLM settings

Tap the model in the top left of chat window (common feature with most of these tools to select your LLM), and at the bottom you get the option “Model Settings”, giving some useful dynamic settings

… with these options:

… noting especially the settings around “Context limit”, and “Max tokens”.

Going back to the chat window, top right there’s an Info button, that brings up some previews we want:

… and after we enable all those “show tokens spent”, “show estimated tokens while typing”, we get this update to the chat interface: (note top right: “~8 tokens”):

… and in the top right of screen a very subtle but welcome cost estimator:

Feature: forking chats

This happens often when prompt engineering: you go down a conversational avenue that doesn’t quite work out, so you want to “rewind” a few steps, and try a different branch of conversation. Easy with humans; not so easy with LLMs and their limited context-windows. Large context-windows may not fix this – may even make it worse.

But TypingMind supports this via “forking”:

… we fork at that point, and take a different route:

Both your original conversation and the new one still exist. They’re automatically duplicated/split in the sidebar:

… but: beware the costs. I only did a brief check on this, but it appears to be doing as expected: duplicating the full conversation with OpenAI. My OpenAI usage on this API key (only used for this one app) had a lot more context usage than I’d have expected from the basic conversation if it hadn’t duplicated the full context to create the forked chat:

Overall the costs tracking/estimation in TypingMind is a nice start, but it’s not really enough. Ideally it would provide a richer interface for this – e.g. desirable missing features could be:

- When you hit “Fork”, give a preview of how much it’s going to cost you to press that button

- A dashboard/overview screen showing where the costs are distributed across all your chats (instead of current: only shows within each chat), so you can understand and predict spending
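The “preview before you fork” idea in the list above could be as simple as the sketch below. It assumes (as with stateless chat-style APIs) that the client resends every kept message with the next request, it ignores output tokens, and it borrows the $30 per million tokens figure quoted later in this article purely as an example rate. The function names and token counts are hypothetical, and this is not how TypingMind computes anything.

```python
def fork_input_tokens(
    message_tokens: list[int], fork_at: int, new_message_tokens: int
) -> int:
    """Input tokens for the first request on a forked branch.

    message_tokens: token count of each message in the original chat, in order.
    fork_at: number of messages kept (everything before the fork point).
    """
    if not 0 <= fork_at <= len(message_tokens):
        raise ValueError("fork_at must lie within the conversation")
    return sum(message_tokens[:fork_at]) + new_message_tokens


def estimated_cost_usd(tokens: int, usd_per_million_tokens: float) -> float:
    """Convert a token count into dollars at a flat per-million rate."""
    return tokens * usd_per_million_tokens / 1_000_000


# Hypothetical chat: four messages so far, fork after the second one.
history = [200, 800, 150, 900]
tokens = fork_input_tokens(history, fork_at=2, new_message_tokens=100)
print(tokens, estimated_cost_usd(tokens, usd_per_million_tokens=30.0))
```

Even a rough number like this, shown next to the “Fork” button, would make the hidden cost of re-sending context visible before you pay for it.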

Vidura – https://vidura.ai/

Note: despite seeming unmaintained, Vidura appeared 3rd on my google search for prompt-management tools; there’s not much out there!

TL;DR

- Pure “prompt management” tool

- Simple clear UI albeit mediocre / MVP with obvious UX mistakes

- Focus on: becoming a new “fun” social network, donating your AI work (!) to others; enterprise users: look away now!

- Legals: completely missing

- Integration: doesn’t use your API keys, so it’s presumably rate-limited on their end, won’t have access to any paid/exclusive LLMs you use, and ultimately only for ‘toy’ projects

Issues

- non-standard account-creation system, with someone’s amateur attempt at forcing “strong passwords”; you’ll have to jump through a few hoops but eventually find one that it doesn’t arbitrarily reject.

- poor front-end coding – e.g. the output from the LLM/chatbot doesn’t scroll as it appears, you have to manually move the scrollbar

Features

- color-coding of the output, headings have colors (nice) instead of excessive use of BOLD text (as on OpenAI’s site)

- Templates/Variables in prompts? YES

Product Experience

Core interface doesn’t want you to use LLMs, it wants you to see their ‘leaderboard’ and re-use other people’s prompts. This product is aimed at non-serious users who are just randomly clicking on existing prompts to ‘see what happens’.

At first it seems you can’t do anything until you “create a category”.

…but creating a category has no effect. Nothing happens, page is the same as before you did that.

Turns out: actually you don’t need to create a category, the onboarding was misleading. It was already showing 15 arbitrary, empty, ‘private categories’ that you probably don’t want. You have to go through and delete them by hand – you can’t rename them, and until you remove them any useful categories you create (like the new one that seemingly disappeared) are created off the bottom of screen.

Renaming categories … simply does nothing. Button is there but code not implemented (MVP?).

Creating a prompt works fine. I copy/pasted a recent ChatGPT+ prompt in, it worked.

Templating: it lets you {{write variables}} and has a web-form at the bottom where you overwrite the values. There is no history / record / dropdown / saving of variables – they are destroyed as soon as you change them. You can read your own history and scan through and copy/paste old versions back out.
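The `{{variable}}` style of templating described above is easy to sketch, which makes the number of broken implementations in this roundup all the more surprising. The example below is a minimal version under my own assumptions: `render_prompt`, the regex, and the sample template are hypothetical and not taken from Vidura or any other tool reviewed here.

```python
import re

# Matches {{name}} with optional spaces inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template: str, values: dict[str, str]) -> str:
    """Replace every {{name}} placeholder; fail loudly if a value is missing."""
    needed = {m.group(1) for m in PLACEHOLDER.finditer(template)}
    missing = needed - set(values)
    if missing:
        raise KeyError(f"no value supplied for: {sorted(missing)}")
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


# Hypothetical template, reused with different values each time.
template = "Summarise this {{doc_type}} for a {{audience}} audience."
print(render_prompt(template, {"doc_type": "contract", "audience": "non-legal"}))
```

Keeping the values in a plain dictionary also gives you the thing Vidura lacks: each set of values can be saved and replayed alongside the prompt version it was used with.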

History opens a separate tab. You lose the color-coding :(, but you get an easy side-by-side of “text I entered” vs “output I received” (with the original OpenAI formatting intact).

Legals, Data, and Integration

I don’t know what it’s doing with my content – it didn’t ask for sign-in to OpenAI.com — have they opted-out of OpenAI saving (and storing, selling, using) my prompts? Where is my data going/gone? No answer given.

There is no information about legality, ownership, how it works, etc. Once you’re logged in you can’t even see the main site (need to dig out a URL or re-google the product).

They heavily promote “we’re on ProductHunt” but they launched almost a year ago with no updates and only 8 votes. Seems unmaintained.

Weirdnesses

It expresses judgement on how good your prompts are, with zero input from you (and it’s … very wrong). That red dot is declaring a “bad prompt” – but it was actually an excellent one, gave extremely good results.

You can edit … the output … that came from the LLM. You can make it look like ChatGPT said something it didn’t say. There’s presumably some use for this. But it seems odd to me.

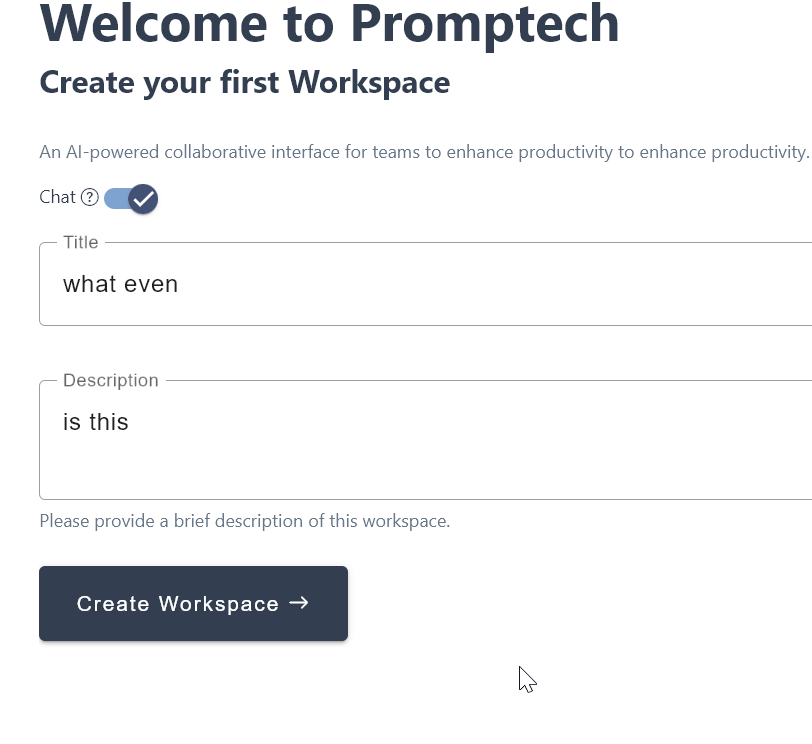

PrompTech – https://app.promptech.ai/

TL;DR

- 50% a mediocre Kanban-board

- 50% a “prompt management” tool

- Maybe great if you want to give demos to Product Managers who are too terrified to use actual, real, ChatGPT?

Issues

- I hate it. As they say: “they were so busy asking ‘can we?’ that they never stopped to question: ‘should we, tho?'”

- Doesn’t work with API keys; instead charges you to re-sell API credits (but seemingly at a loss? Unless you buy multiple seats, where OpenAI would pool your credits, and this product would not, thereby charging you a lot more)

Features

- If you really want a KanBan, and don’t want to use a modern standard product like ProductBoard … this could be the tool for you!

Product Experience

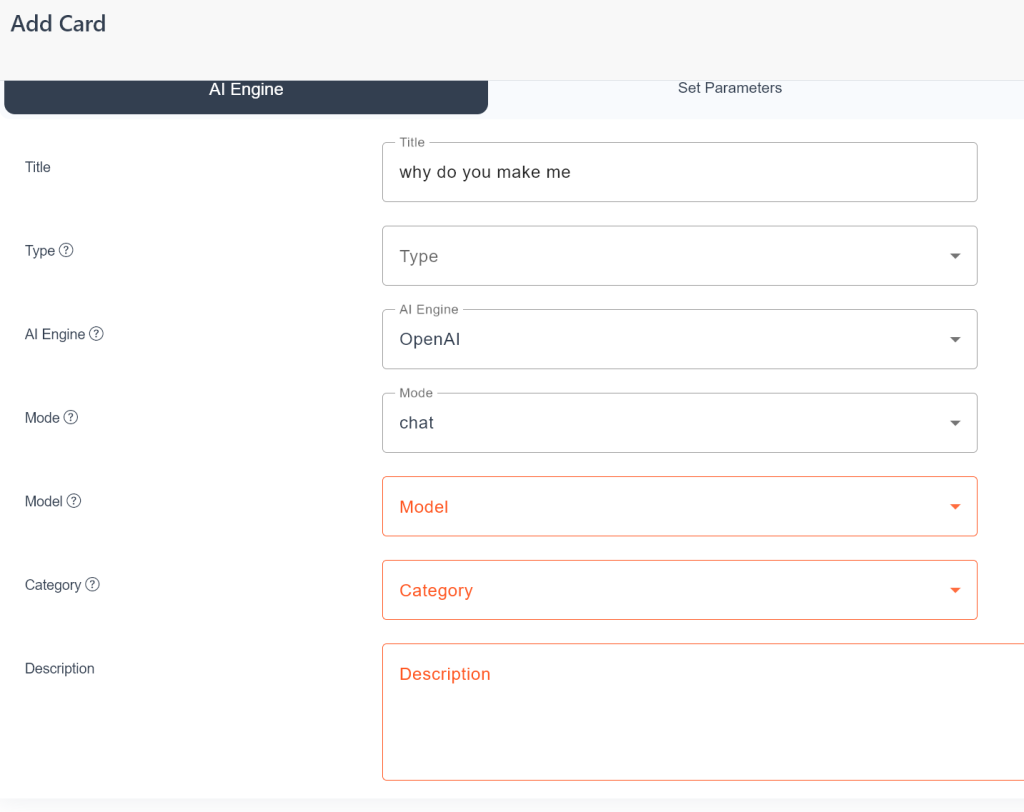

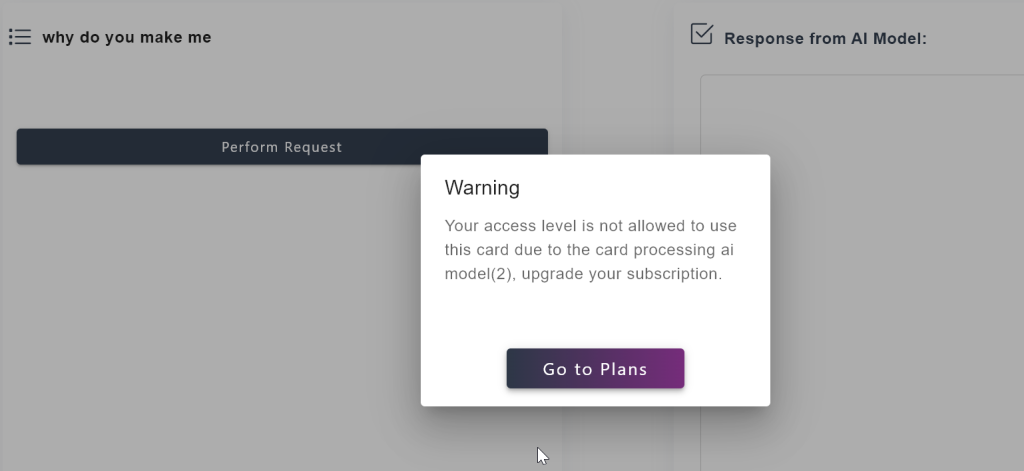

After some confusion about the name (Promp Tech? Prompt Ech? …?) it stumbles out the gate with an unexplained compulsory dialog:

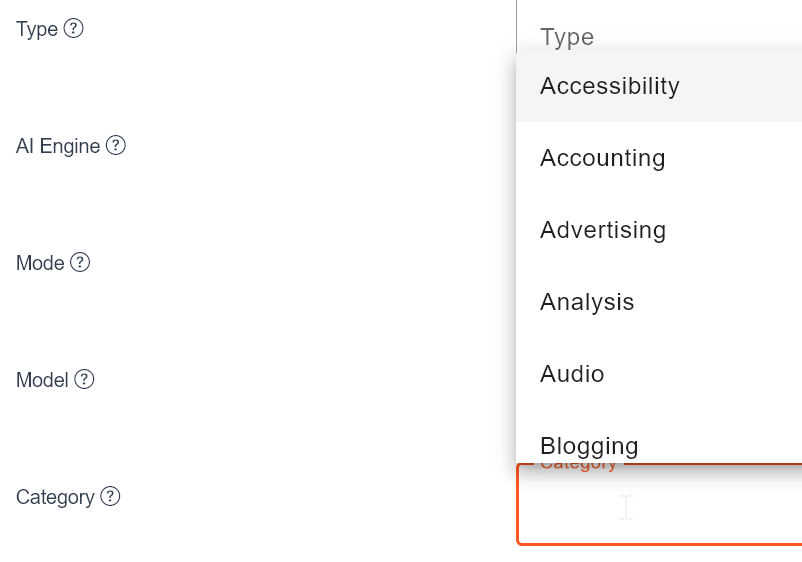

… then explodes into your brain with 3 PAGES of compulsory options, none of which you care about or need. Note: every field on this screen is compulsory … and it’s only page 1. By the way – this isn’t to let you run a prompt – oh, no, this is to allow you to DOCUMENT that you’re INTENDING to write a prompt. Joy!

…and then really hits its stride by demanding a ‘Category’ from a list (that’s compulsory) with no explanation. Does it matter? Do you care?

Now we can run a prompt, right? Right? … Ah, wait, first you have to confirm that you’re not going to change any of the things that you obviously weren’t going to change …

… and NOW we can run a prompt.

Nope. We’ve created a ‘Card’, but we have to go somewhere else to ‘perform’ (their word!) it. Uhhh. OK.

OK. Fine. I’ll bite: let’s go ‘perform a card!’

Hahahahahaha.

Those “Plans” are: $10/month/person to … not use your API key, but instead buy Tokens off these guys. This is where it gets more interesting:

- OpenAI API cost: $30 / 1 million tokens

- Promptech API cost: $10 / 0.5 million tokens (ChatGPT only)

… so … Promptech is … paying out $30 for every $20 of revenue they receive? That’s worrying for a business model.
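To make that arithmetic explicit, here is a minimal sketch that normalises both quoted prices to dollars per million tokens. The figures are the ones quoted above; the function and variable names are made up for illustration, and real OpenAI pricing varies by model, so treat this as a back-of-envelope check rather than an audit of their books.

```python
def dollars_per_million(dollars: float, tokens: int) -> float:
    """Normalise a quoted price to dollars per 1 million tokens."""
    return dollars * 1_000_000 / tokens


# Prices as quoted in this review (assumed to be comparable, same model).
openai_cost = dollars_per_million(30, 1_000_000)   # what the reseller would pay
promptech_price = dollars_per_million(10, 500_000)  # what the reseller charges

# Negative margin means a loss on every million tokens sold.
margin_per_million = promptech_price - openai_cost

print(f"OpenAI:    ${openai_cost:.2f} per 1M tokens")
print(f"Promptech: ${promptech_price:.2f} per 1M tokens")
print(f"Margin:    ${margin_per_million:.2f} per 1M tokens")
```

If the quoted prices really are like-for-like, every million tokens sold costs them more than they charge for it.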

Vaporware – watch this space

These are tools/apps/services that currently don’t exist but we believe will “probably” launch/re-launch this year. Exciting tools are frequently announced – but until they’re live and available they’re no use to us; if you’re a tool author: hurry up and launch! 🙂

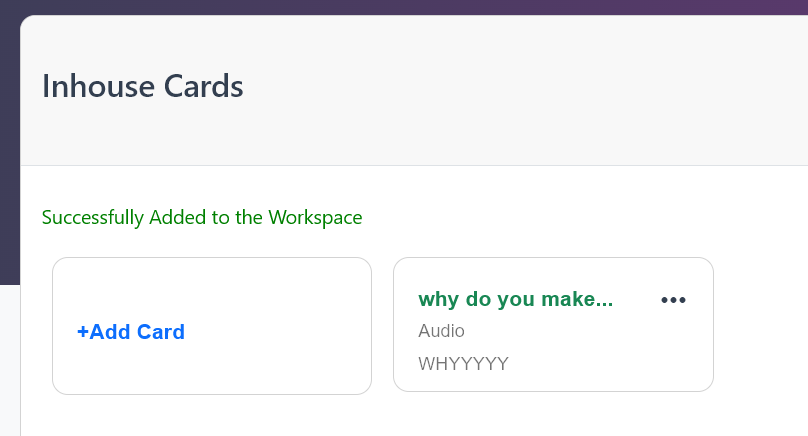

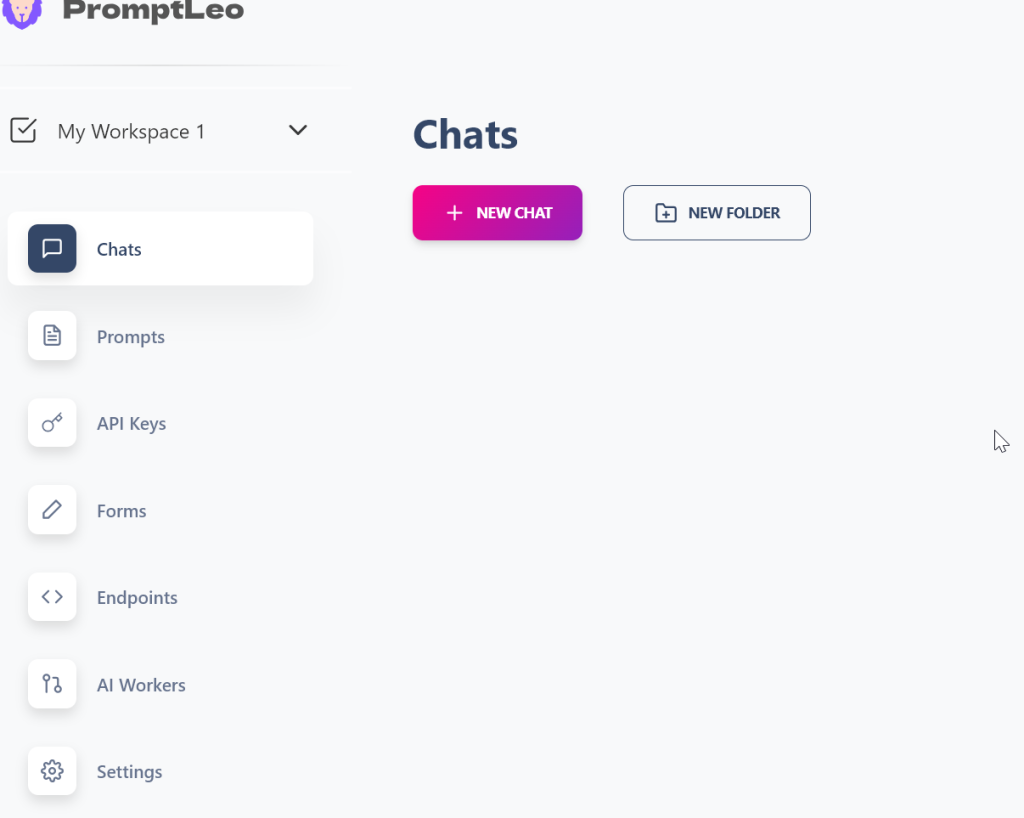

PromptLEO – https://promptleo.com/

TL;DR

- Pure “prompt management” tool

- Beta/unfinished – doesn’t really work yet

- A little surprisingly: they’re asking for payment already (‘trial ends after 14 days’)

- Simple clear UI with nice ‘modern’ theme

- Has potential: see e.g. the live token counters

Issues

- Templates/variables: placeholders exist, but they do nothing

- Weird typing bugs in their form fields

- No error detection/handling – silently fails/crashes

Features

- Templates/variables? BROKEN/BUG

- Token cost previews? YES (# only, no $)

Product Experience

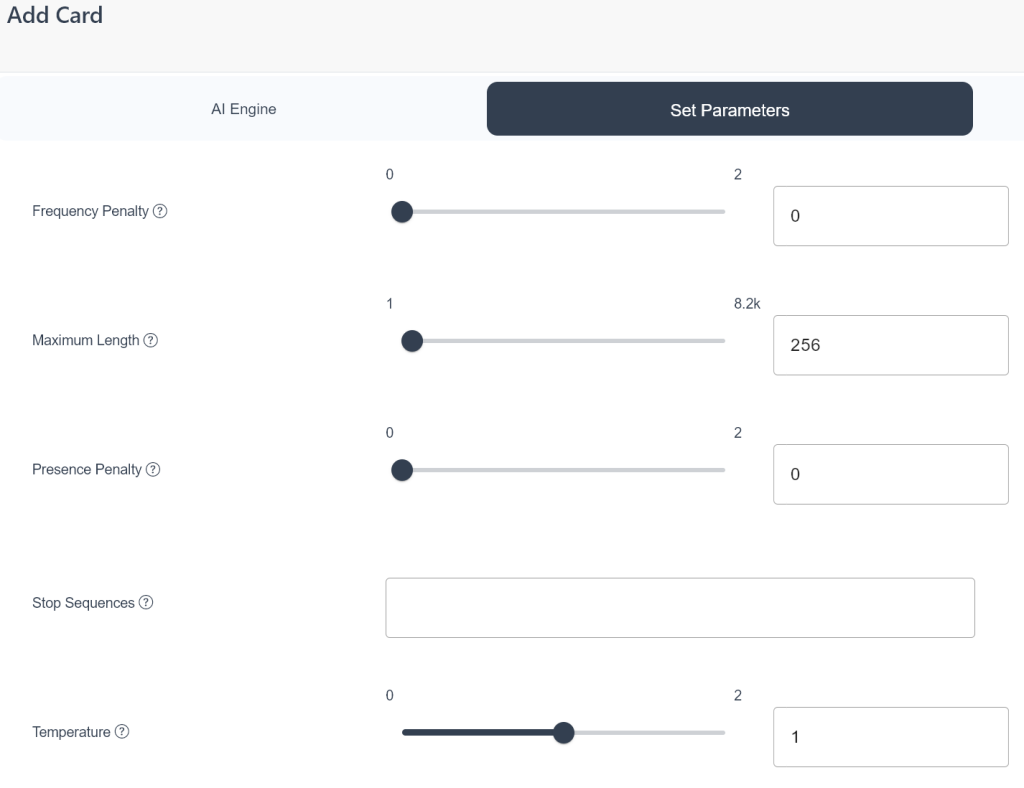

The main screen has a nice simple layout, your main items (and no clutter) down the side:

…but it feels incomplete / beta release throughout. E.g. the forms have weird bugs in their CSS/JS:

…and e.g. when you first try to use it, it silently crashes if you haven’t already supplied an API key (it never prompts you for this, it just … does nothing):

(I left that for a few minutes; nothing happened)

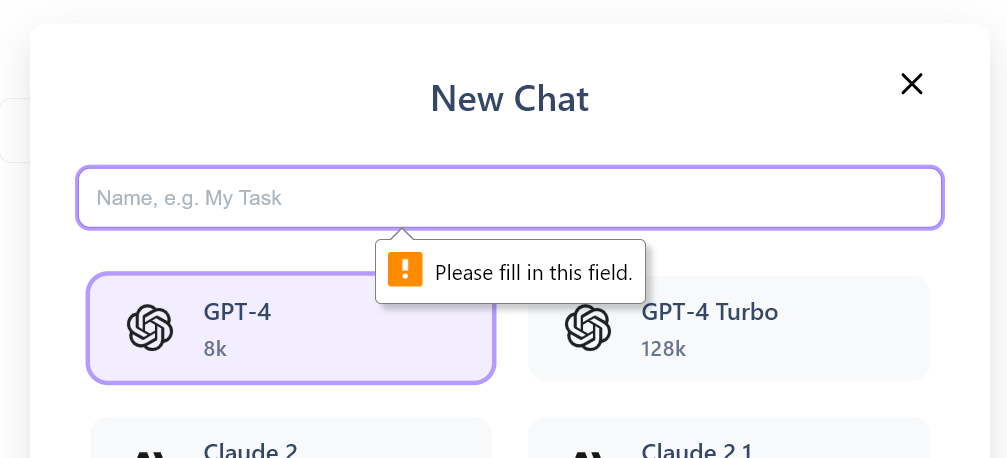

The flow is also a bit annoying; you’re not allowed to just “use” it, first you have to jump through hoops and “choose a title for the chat you haven’t started yet”:

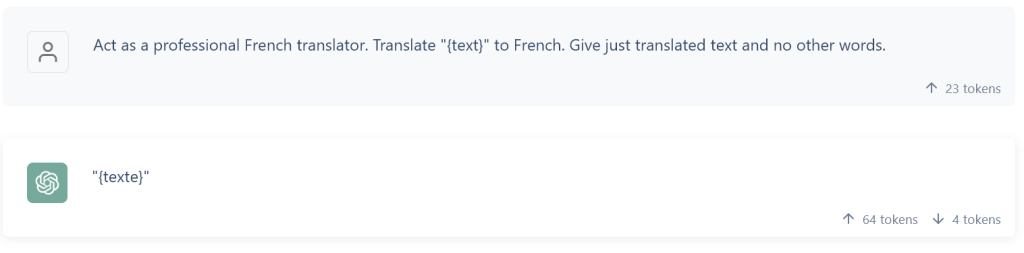

…and templates don’t work / don’t do anything (even using the built-in example):

(that “translate {text} to French” was pre-provided by PromptLEO – but no UI exists to fill in the variable)
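For contrast, here is a minimal sketch of what I’d expect a template feature to do: find the placeholders, ask for a value for each, and substitute them before sending the prompt. This is not PromptLEO’s code; the function names are hypothetical, and it assumes Python-style `{name}` placeholders like the one in their example.

```python
from string import Formatter


def placeholders(template: str) -> list[str]:
    """Return the names of all {placeholders} found in a prompt template."""
    return [name for _, name, _, _ in Formatter().parse(template) if name]


def fill(template: str, **values: str) -> str:
    """Substitute values into the template, failing loudly if any are missing."""
    missing = [name for name in placeholders(template) if name not in values]
    if missing:
        raise KeyError(f"missing values for: {', '.join(missing)}")
    return template.format(**values)


template = "translate {text} to French"
print(placeholders(template))                # the fields a UI would need to ask for
print(fill(template, text="good morning"))   # the prompt that actually gets sent
```

The important part is the loud failure: a tool that knows `{text}` is unfilled should tell you, not silently send the raw template to the LLM.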

PromptHub – https://www.prompthub.us/

TL;DR

- Not live, but lots of hope/press for it

Issues / Features

- N/A

Not available: they’re not letting anyone use it. Several people recommended this to me – none of them had used it; they had only ‘heard of it’. Some blog posts suggest it may previously have been available, but there was too much demand, so it was taken offline for a rebuild.

For now: their site says they have over 2,000 people on the waitlist – get in line. Not much we can do here…

Vellum – https://www.vellum.ai/

TL;DR

- Not live (see image below)

Issues / Features

- N/A

Product Experience

Apparently not a product yet, still in design. The website has only a “Request Demo” button, there’s no product / download / service / software:

HumanLoop – https://humanloop.com/

TL;DR

- Gmail user? BANNED!

Issues

- Pricing page but no price, and no feature details – it’s “Custom”

Features

- N/A

Product Experience

Appears to be another “not finished / not ready to release” product, given the lack of detail on the website. I reached out to their support (via the chatbot/Intercom on their website) and had no response 16 hours later (with a quoted ETA for response of 12 hours). Combined with the ban on Google email accounts, I gave up.

Vaporware – DOA

These are tools/apps/services that appear dead-ended: e.g. unmaintained, e.g. authors have lost interest, e.g. never launched, etc.

Promptly – https://www.promptlyhq.io/

No longer exists:

PRST.ai – https://prst.ai/

Broken “download” button that literally does nothing; broken JavaScript? Hmm.

AI Prompt Manager – https://aipromptmanager.com.au/

TL;DR

- Chrome plugin, thin wrapper on ChatGPT’s web experience, adds very little

Issues

- Random-developer Chrome plugin with a total of 5 ratings and 116 users (i.e. dangerous / untrustworthy; Google has a poor track record here, having allowed plugins that contain viruses/malware; install at your own risk!)

Features

- Website: a large list of 3rd party GPTs

- Plugin: none really, a few things that already exist in ChatGPT, but this moves the buttons somewhere more visible

Product Experience

The extension itself seems to be someone’s practice project for the ChatGPT SDK: it adds buttons to ChatGPT that already exist, but makes them easier to find.

They have a website … which is a list of public GPTs, no further info.